When Rubber Monkey Software of New Zealand asked me to review their filmConvert software, it occurred to me that this was an opportunity to ask some deeper questions about film stock emulation products that never seem to get asked: not just “how,” but “why?”

I’m not much of a product reviewer. I know people who can drill very deeply into a product and produce a review that is, in effect, a tutorial in how to use that product as well as a solid list of its strengths and weaknesses. Sadly I don’t have that kind of patience. These days I find myself more interested in delving into the philosophy of a product like filmConvert than in the details of how to use it. filmConvert is a very easy tool to learn and, based on my experiments with it, it works very well indeed. It’s one thing to learn a tool, though, and quite another to learn why it works. The answer to “why” often leads to more generalized knowledge about how things, such as cameras and color grading tools, generally work, and I find that kind of information incredibly useful on its own.

Rubber Monkey Software is based in New Zealand. Lance Lones, one of its principals, who has a strong background in visual effects as well as color technology, took a lot of time to answer my emailed questions in great detail, for which I am truly thankful. My questions are marked “Art:”, followed by Lance’s responses.

Art: Who is Rubber Monkey?

Lance: The company was formed by Nigel Stanford and Craig Herring here in Wellington. Nigel is keenly interested in filmmaking, and has executive produced and produced a few films here in New Zealand, as well as abroad, including some great things like “Timescapes” with Tom Lowe, and “Second Hand Wedding” here in New Zealand, while Craig is an old school video game developer. I came along a little while later, and am also hugely keen on filmmaking. One of my more useless degrees is, in fact, a Visual Arts Media degree from the University of California, San Diego. Another useless degree is in Physics.

I was introduced to Craig and Nigel shortly after I finished working on the VFX for “Avatar” at Weta Digital back in 2009, and have also produced and shot a few short films myself, so I love the whole process, from storytelling to shooting to editing to VFX.

FilmConvert’s Lance Lones.

Lance: FilmConvert was actually Nigel’s brainchild, and we haven’t looked back since. Rounding out our development team, we recently brought in another great senior developer by the name of Loren Brookes, who hails from the world of visual effects as well, and who has been doing some amazing stuff with our hardware acceleration projects.

Art: How did you become interested in writing FilmConvert? What need did you see that had to be met?

Lance: Bizarrely, or maybe not so bizarrely, FilmConvert really came about because we wanted to use it ourselves!

If you think back just a few years, and it has been only a few years, our biggest challenge then was really how to get the look out of the camera that we could see in our heads, particularly with things like the RED and Viper cameras. At some sort of random point there was this ah-ha moment where we said, “Hey, let’s just make ’em look like film.” At the time we had no idea just how complete and swift the final digital filmmaking revolution would be, so the fact that we accurately captured the look and feel of a technology that’s becoming increasingly rare was almost by accident.

So although I’d like to say this was some great plan, it was actually great serendipity instead. It hit directly on some compelling themes that I love: great science, preservation of a dying technology through more technology, and bringing tools to filmmakers everywhere, and not just to the pointy end of the “A” list.

We just really love film, and somehow wanted to have film in our digital cameras.

Profiling cameras in the “lab.”

Art: How do you define the “film” look, and, perhaps more importantly, how do you define a look that screams “video”?

Lance: Film look is indeed sort of a strange thing to try and define. On a technical level there are very specific colour responses and associated printing responses. But that’s really only half of the story, because the real arbiter of a film look is this funny plastic thing called the human brain which does all sorts of odd things. Both it and the human eye adapt over time, so the exact same thing will look different depending on a number of things such as how long you’ve been sitting in the theatre, whether or not it was sunny or cloudy outside the theatre, how dark the theatre is, and what colour the walls are, just to name a few.

So, coming back to film… well, it’s hard to say what the film look is. It’s not the exact colours that are important; rather, film has what I call a more “organic” feel: the highlights roll off nicely, the shadows roll under nicely, there’s a strange twist in the middle of the colour response, and a curious lack of real deep blues. When running through a projector, the fact that the photosites move around from frame to frame (grain!) adds to the effect, which your brain puts together and screams “film!”

A strict video response seems to miss this somehow. The colours almost always pop too much, the response isn’t quite right, and the exposure curve crashes to a stop right in the highlights. The fact that the filmic chemical process works like our eyes, with a logarithmic response that doesn’t clip in any hard way, adds to that feel.

To some degree it’s almost a philosophical thing. Film is sort of that mystical allegory-of-the-cave thing: reality presented to us by filmmakers through a chemical process, which is how life works at its most fundamental level. That’s what film does for you. It’s a little bit nutty perhaps, and not easily defined, but the weird organic-like nature of this process is to me part of the fun of filmmaking.

Art: You said that film has a “strange twist” in the middle of the color response. Can you tell me more about that?

Lance: It turns out that in most film stocks the responses of the various layers, and the filters in between, introduce a coupling effect. This makes the neutral axis move around a bit in terms of its final colour as it goes from low to high exposures, which I term a twist in the colour response, as it’s not exactly neutral all the way up. Electronic sensors aren’t coupled in this manner, and each colour channel responds linearly with the number of photons coming in, so they don’t normally exhibit this sort of response, nor is it usually reproduced in cameras’ colour processing.
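To make the idea of a “twist” concrete, here is a toy sketch, not filmConvert’s model: three dye layers with slightly different characteristic curves, plus a coupling matrix standing in for interlayer effects. A neutral exposure comes out with a channel spread that changes with exposure, while an idealised sensor whose channels respond identically stays neutral at every level. All curve parameters and coupling weights are hypothetical, invented purely for illustration.

```python
import math

# Hypothetical per-layer curve parameters: (contrast, speed offset in log10 exposure).
# Small layer-to-layer differences are what make grey drift; values are illustrative only.
LAYERS = {"r": (0.62, 0.00), "g": (0.60, 0.03), "b": (0.57, -0.04)}
D_MIN, D_MAX = 0.2, 3.0

# Hypothetical interlayer coupling: each layer's final density borrows a little from its neighbours.
COUPLING = [
    [1.00, 0.06, 0.02],
    [0.04, 1.00, 0.05],
    [0.01, 0.07, 1.00],
]


def layer_density(log_e, contrast, offset):
    """Logistic characteristic curve whose mid-scale slope equals `contrast`."""
    span = D_MAX - D_MIN
    k = 4.0 * contrast / span
    return D_MIN + span / (1.0 + math.exp(-k * (log_e - offset)))


def film_densities(log_e):
    """Densities of a neutral exposure after per-layer curves and coupling."""
    raw = [layer_density(log_e, *LAYERS[c]) for c in "rgb"]
    return [sum(w * d for w, d in zip(row, raw)) for row in COUPLING]


def sensor_values(log_e):
    """An idealised sensor: every channel responds identically and linearly to a neutral input."""
    v = 10.0 ** log_e
    return [v, v, v]


def neutral_drift(channels):
    """Spread between the largest and smallest channel; 0 means perfectly neutral."""
    return max(channels) - min(channels)


if __name__ == "__main__":
    for log_e in (-1.5, 0.0, 1.5):
        print(f"log E {log_e:+.1f}: film drift {neutral_drift(film_densities(log_e)):.3f}, "
              f"sensor drift {neutral_drift(sensor_values(log_e)):.3f}")
```

The film drift is non-zero and varies with exposure, which is the “not exactly neutral all the way up” behaviour in miniature.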

Art: Technically, how do you reproduce the looks of specific film stocks?

Lance: Ah, this is an easy question. As a scientist, I measure it! Science is cool that way.

Well, okay, it’s not in fact all that easy at all, as there are all sorts of processes in the middle that affect the way that film responds and is perceived. But at the base level we take a film stock, expose it with some known and calibrated colours, and then measure the resulting film densities. We then introduce a wavelength-based profile to model the layers, and build a negative, and then a print, from that same data set.
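The measurement step rests on ordinary densitometry: density is the base-10 logarithm of incident over transmitted light. The sketch below, an illustration rather than Rubber Monkey’s pipeline, reads a simulated step wedge and estimates the mid-scale slope (gamma) of the characteristic curve. The logistic “film” and its parameters are hypothetical stand-ins for real measurements.

```python
import math


def density_from_transmittance(t):
    """Optical density as a densitometer reports it: D = log10(1 / T)."""
    if not 0.0 < t <= 1.0:
        raise ValueError("transmittance must be in (0, 1]")
    return -math.log10(t)


# Hypothetical stand-in for the film under test: a logistic curve with a chosen mid-scale gamma.
D_MIN, D_MAX, TRUE_GAMMA, MID_LOG_E = 0.2, 3.0, 0.6, 0.0


def simulated_transmittance(log_e):
    span = D_MAX - D_MIN
    k = 4.0 * TRUE_GAMMA / span  # a logistic's slope at its midpoint is span * k / 4
    d = D_MIN + span / (1.0 + math.exp(-k * (log_e - MID_LOG_E)))
    return 10.0 ** (-d)


def measure_wedge(step=0.15, steps=21):
    """Expose a step wedge (0.15 log10 units is about half a stop) and read back densities."""
    start = MID_LOG_E - step * (steps // 2)
    wedge = []
    for i in range(steps):
        log_e = start + i * step
        wedge.append((log_e, density_from_transmittance(simulated_transmittance(log_e))))
    return wedge


def mid_scale_gamma(wedge):
    """Slope of the characteristic curve around its centre, by central difference."""
    mid = len(wedge) // 2
    (x0, d0), (x1, d1) = wedge[mid - 1], wedge[mid + 1]
    return (d1 - d0) / (x1 - x0)
```

With real film, the simulated transmittance would be replaced by readings from exposed and processed patches.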

One of the best days here was the first time we punched up the negative model and, out of curiosity, had it produce an RGB negative image using a film illuminant and a set of human perception values. Without any fussing the thing showed up orange, just like a real negative. Science is awesome that way!

Profiling the Canon C100.

Lance: To this stack of processes, though, is added a little secret sauce which is the real technical issue that we had to overcome. What we’ve got is a detailed perceptual model that’s added on top of this wavelength-based and quite accurate film model. So, for example, the white point of a piece of film and the white point of your monitor are vastly different, both in actual luminosity and actual color. We introduce a white point adaptation model to move the measured film white point more towards a Rec 709 white point so that it’ll sit natively in your monitor space a little more nicely.
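White point adaptation of this kind is commonly done with a von Kries-style transform; the sketch below uses the standard Bradford matrix. It is only an assumption that something similar happens inside filmConvert, and the film white here is a hypothetical stand-in (D50’s XYZ values, chosen only because D50 is warmer than D65). The `amount` parameter, also hypothetical, stops the adaptation part of the way, echoing Lance’s “more towards” a Rec 709 white.

```python
# Bradford cone-response matrix, a standard published matrix for chromatic adaptation.
BRADFORD = [
    [0.8951, 0.2664, -0.1614],
    [-0.7502, 1.7135, 0.0367],
    [0.0389, -0.0685, 1.0296],
]

D65_WHITE = (0.95047, 1.0, 1.08883)  # XYZ of the Rec 709 (D65) white
# Hypothetical stand-in for a measured film white: D50's XYZ, used only because it is warmer.
FILM_WHITE = (0.96422, 1.0, 0.82521)


def mat_vec(m, v):
    return [sum(m[r][c] * v[c] for c in range(3)) for r in range(3)]


def inverse3(m):
    """Inverse of a 3x3 matrix via the adjugate."""
    (a, b, c), (d, e, f), (g, h, i) = m
    det = a * (e * i - f * h) - b * (d * i - f * g) + c * (d * h - e * g)
    adj = [
        [e * i - f * h, c * h - b * i, b * f - c * e],
        [f * g - d * i, a * i - c * g, c * d - a * f],
        [d * h - e * g, b * g - a * h, a * e - b * d],
    ]
    return [[x / det for x in row] for row in adj]


BRADFORD_INV = inverse3(BRADFORD)


def adapt(xyz, src_white, dst_white, amount=1.0):
    """Bradford adaptation of an XYZ colour from src_white towards dst_white.

    amount=1.0 adapts fully; smaller values stop part-way between the two whites.
    """
    target = [s + amount * (d - s) for s, d in zip(src_white, dst_white)]
    src_cone = mat_vec(BRADFORD, src_white)
    dst_cone = mat_vec(BRADFORD, target)
    cone = mat_vec(BRADFORD, xyz)
    # Scale each cone response by the ratio of destination to source white.
    scaled = [c * d / s for c, d, s in zip(cone, dst_cone, src_cone)]
    return mat_vec(BRADFORD_INV, scaled)
```

Blending the two whites linearly in XYZ is a simplification that behaves sensibly here because both have Y = 1.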

We also have a gamut and exposure matching model that adapts colours that film can display to a similar colour in your monitor’s gamut space, and importantly, we do the same thing with the source camera, all in such a way that we’re trying to maintain the same perceptual intent and richness of what film does. It comes back to that brain perceptual model thing again.

While we’re reproducing film with a huge degree of accuracy, we’re not in fact exactly reproducing the exact colours that you would see in a cinema. We have to adapt it, otherwise footage viewed on a computer or HD monitor would seem murky and a bit yellow and you’d think something was wrong. It’s all done with a massive amount of care as we are hugely pedantic about reproducing the oddities of the chemical film process and ensuring the accuracy of that part of the model.

The computation is strangely expensive but, in the end, this real-world data driven model is the core value that we’re aiming for, and which sets us apart from most of our competition. It’s massively worth the effort.

Art: I’ve noticed that one aspect of the film look is that saturation decreases with exposure, whereas video saturation increases until it clips. Is this something you compensate for in your software?

Lance: Indeed, some of these effects we are able to simulate. Part of the difficulty though, is that, in this example, the dynamic range of the film negative/print stock lets highlights roll off really nicely while the average video camera won’t have the same amount of available overhead for super bright highlights. We’ll pull the simulation quietly into a more film-like model, but we won’t force it to match the full film effect as that would unduly affect more of the on-exposure range.
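One simple way to get saturation falling with exposure, offered as a hedged sketch rather than filmConvert’s method, is to blend each pixel towards its own Rec 709 luminance once it gets bright. The thresholds and the `strength` cap are hypothetical; the cap reflects Lance’s point that the simulation is pulled “quietly” towards film rather than forced to the full effect. Values are linear RGB with 1.0 as a nominal white.

```python
REC709_LUMA = (0.2126, 0.7152, 0.0722)


def luminance(rgb):
    return sum(w * c for w, c in zip(REC709_LUMA, rgb))


def highlight_desaturate(rgb, start=0.5, end=1.0, strength=0.6):
    """Blend linear RGB towards its own luminance as luminance rises from `start` to `end`.

    Below `start` the colour is untouched; at or above `end` the blend reaches `strength`.
    Blending towards luminance leaves the luminance itself unchanged.
    """
    y = luminance(rgb)
    if y <= start:
        return list(rgb)
    t = min((y - start) / (end - start), 1.0)  # 0 at start, 1 at end
    t = t * t * (3.0 - 2.0 * t) * strength     # smoothstep for a gentle onset, then cap
    return [c + t * (y - c) for c in rgb]
```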

A side note from Art:

Because every HD camera has a different amount of overexposure “headroom,” or portion of its dynamic range allocated to the brightness values between middle gray and maximum highlight exposure, that soft film roll-off has to be tailored to work with every camera.

For example, if a film stock normally rolls off highlights across the top three stops of its seven stops of overexposure headroom then this same model might work marvelously with a camera that offers the same seven stops of overexposure headroom in which to work, whereas a camera with four stops of overexposure headroom may require that roll-off to be further compressed in order to achieve the same look with less room in which to work.
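Art’s arithmetic can be sketched as a shoulder whose width is a fixed fraction of the camera’s headroom. The exponential curve below is one common soft-clip shape, assumed for illustration; the 3/7 fraction simply mirrors the hypothetical “top three of seven stops” example above. Input and output are in stops above middle gray.

```python
import math


def shoulder(stops, headroom, rolloff_fraction=3 / 7):
    """Soft highlight shoulder, in stops above middle gray.

    The top `rolloff_fraction` of the camera's headroom is used for the roll-off.
    Below the knee the curve is the identity; above it, values ease towards
    `headroom` (slope 1 at the knee, never reaching it) instead of clipping hard.
    """
    width = headroom * rolloff_fraction
    knee = headroom - width
    if stops <= knee:
        return stops
    return knee + width * (1.0 - math.exp(-(stops - knee) / width))
```

With seven stops of headroom the knee sits four stops above middle gray; with four stops it drops to 16/7, roughly 2.3 stops, so the same shape is squeezed into less room.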

Lance: So it’s part of that delicate model balance between technical correctness, which would see video highlights clip hard because of where the real world exposure is, and modeling film behavior, where we adjust highlights that technically shouldn’t change. We’re a bit in between, which is part of our whole philosophy: strictly emulating the things that matter, and modeling the perceptual behavior of things that don’t but which complete the effect.

Happily though, some of the newer cameras, like the BMCC, Alexa, and even the Canon C100 & C300 have a nice bit of headroom available that’s not present directly in cameras like the 5D’s video mode, so more of these wonderful filmic effects can be accurately simulated on these cameras.

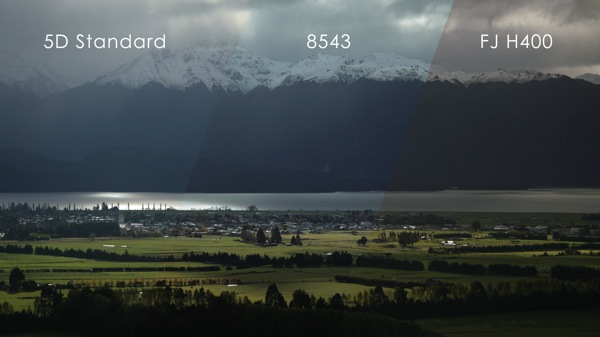

(left to right) Canon 5D untouched, then processed through filmConvert to emulate Fuji’s 8543 motion picture stock and Fuji’s Nexia H400 stills stock.

Art: Are there any other non-obvious aspects of the film look that you exploit?

Lance: For the most part we’re aiming more towards the color effects in our emulation model, so things like halation and aberration effects and differences in resolving power due to grain growth and diffraction limiting are not being addressed specifically.

We’re actually concerned that this would be prohibitively expensive from a computational standpoint. We started down this road with the grain model and quickly got bogged down in a computation that, although physically accurate, was just too expensive to be workable. So for some of these secondary spatial effects we’ve simplified the model considerably in the interest of efficiency.

Will we add them in the future? I would place a pretty strong bet that upcoming versions may well include more of these wonderful filmic effects.

Art: How do you emulate film grain, and how important do you think that is to the film look?

Lance: I think grain emulation is massively important to the film model, yet at the same time it’s one of the most misunderstood aspects of our film model. We’ve noticed a bunch of folks talking about film grain and it seems that it’s a matter of expectation, but the problem is that most people don’t see actual film grain in an actual picture anymore, so they’re not quite sure what it really looks like.

We started where I like to start, by measuring the real world. We examined actual bits of film using a high resolution scanner. With this we could look at things like structure and the amount of grain across various exposures and colours. We initially started out thinking that we could actually simulate grain with a sort of physical model by building grain algorithmically, but this sort of brute-force physical model was hugely expensive.

At some point we decided to use the grain scans we had to provide the density structures, sort of the seed for the underlying grain morphology, and combine that with a density profile that includes density shifts in the underlying RGB data that matches what real grain does. And not only did this work amazingly well, but it re-measures in precisely the same way a piece of film does which is hugely exciting.
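The approach Lance describes, a scanned grain structure used as a seed and scaled by a density profile, might be sketched like this. Everything here is assumed: plain Gaussian noise stands in for a real scan, the bell-shaped amplitude profile is invented, and the per-frame tile offset is one simple way to make the grain move from frame to frame. Grain is added in the density domain, in the spirit of density shifts in the underlying data.

```python
import math
import random


def normalise(tile):
    """Zero-mean, unit-variance copy of a 2D grain tile."""
    values = [v for row in tile for v in row]
    mean = sum(values) / len(values)
    var = sum((v - mean) ** 2 for v in values) / len(values)
    std = var ** 0.5 or 1.0
    return [[(v - mean) / std for v in row] for row in tile]


def fake_scan(width, height, seed=0):
    """Hypothetical stand-in for a scanned grain patch: plain Gaussian noise."""
    rng = random.Random(seed)
    return [[rng.gauss(0.0, 1.0) for _ in range(width)] for _ in range(height)]


def grain_amplitude(density, peak=0.04, centre=1.2, spread=0.8):
    """Hypothetical density-dependent grain strength, largest in the mid densities."""
    return peak * math.exp(-((density - centre) / spread) ** 2)


def apply_grain(densities, tile, frame=0):
    """Add tiled grain in the density domain; each frame uses a different tile offset."""
    rng = random.Random(frame)
    th, tw = len(tile), len(tile[0])
    oy, ox = rng.randrange(th), rng.randrange(tw)
    return [
        [d + grain_amplitude(d) * tile[(y + oy) % th][(x + ox) % tw] for x, d in enumerate(row)]
        for y, row in enumerate(densities)
    ]
```

Because the tile is zero-mean, a flat patch the same size as the tile keeps its average density; only its structure changes.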

Part of the fun of building FilmConvert has been coming up with these vast simplifications to a model: finding ways of collapsing complicated effects into elegant data structures that are orders of magnitude more efficient, yet still manage to retain the complexity and accuracy of the original.

We couldn’t have done a film emulator without grain. It’s crucial.

Art: How hard is it to build the same look for different cameras? Do you find that different cameras have dramatically different looks?

Lance: In the beginning, collecting and building profile data was quite difficult and ate a lot of our resources. Happily, though, as we began to learn more about the hangups that cameras have, the process has gotten significantly smoother and quicker. Really the difficulty comes down to making sure that you know what a camera is actually doing: for example, does a camera have some sort of automatic knee that’s going to confuse our profiler? This really just requires a good read of the manual. Bizarrely, as a result of FilmConvert, I’ve also become an expert in the operation of a ton of cameras.

Speaking of cameras, it’s also been a bit eye-opening just how different manufacturers are with respect to how they approach both the colour balance of a camera and its colour processing. What I found really interesting is how various cameras process colours in their own 3D space. I’ve found some cameras whose colours change dramatically throughout the exposure range in really odd ways. Happily we’re able to unwind this signal processing so that we can get a great consistent film look.
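Unwinding a camera’s colour processing usually starts with shooting a chart and fitting a transform from camera values to known reference values. The sketch below fits only a 3x3 matrix by least squares; cameras whose colours shift with exposure, as Lance describes, generally need more than that (a 3D LUT or an exposure-dependent model), and filmConvert’s actual method is not described in the interview. The function names are hypothetical.

```python
def solve3(a, b):
    """Solve a 3x3 linear system a.x = b by Gauss-Jordan elimination with partial pivoting."""
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]
    for col in range(3):
        pivot = max(range(col, 3), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(3):
            if r != col:
                f = m[r][col] / m[col][col]
                m[r] = [x - f * y for x, y in zip(m[r], m[col])]
    return [m[i][3] / m[i][i] for i in range(3)]


def fit_matrix(camera_rgb, reference_rgb):
    """Least-squares 3x3 matrix M with M.camera ~= reference, solved row by row
    through the normal equations (A^T A) m = A^T b."""
    ata = [[sum(p[i] * p[j] for p in camera_rgb) for j in range(3)] for i in range(3)]
    rows = []
    for ch in range(3):
        atb = [sum(p[i] * q[ch] for p, q in zip(camera_rgb, reference_rgb)) for i in range(3)]
        rows.append(solve3(ata, atb))
    return rows
```

Residuals that stay large after the fit, or that differ between dark and bright patches, are a sign the camera is doing something a single matrix cannot undo.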

We’ve also been occasionally flummoxed when the exact same camera changes its colour science when we do things like upgrade its firmware, which took us a bit by surprise, but now that we know that manufacturers are likely to tinker in these ways we can keep an eye on them.

Once we have the data from the camera itself, building the model is actually fairly straightforward. The first time we did it, it definitely wasn’t, but we’ve gotten pretty good at it now.

(left to right) Arri Alexa log footage processed through filmConvert to emulate Fuji’s SuperX 400 stills stock and Kodak’s 5207 motion picture stock.

Art: In broad strokes, how would you describe the looks of the different cameras that you profile?

Lance: All over the place! Well, it’s not really as bad as all that, but there are actually fairly significant differences from manufacturer to manufacturer and even within the same camera family.

Before doing FilmConvert I was already familiar with a few different electronic cinema cameras from my work in VFX, but these were more of the big-brick high-budget cinema type cameras, the Panavision Genesis and the like. Moving down and evaluating cameras like the Canon 5D came as an enormous surprise: here we’ve got cameras ordinary filmmakers can afford to buy that are similar in performance to things that used to cost $250-500K. You have to work within the limitations of the less expensive cameras a bit, but compared to the stuff I used in art school (tube cameras, with only two tubes) these cameras are magnificent!

As to the look, well, that’s quite hard to quantify. Cameras like RED and Alexa are meant to be massaged quite a lot in post, so although they have a distinctive out-of-the-box look, I don’t think I would describe them as having a look that you’d ever want to keep intact in its default state. That being said, the Alexa has a fairly nice out-of-the-box balance, although its default end-to-end gamma is a little less than, say, a Canon 5D’s, and its skin tones go a bit pinkish. But really you’d want to capture Alexa or RED footage with a great exposure and look on set, followed by a gentle massage in post.

So, I think this is a trick question! There are no broad strokes that you can really talk about. They all have different looks, and without exception they can all be pushed around in camera or, if you’re lucky, during extraction in post. The filmmaker’s challenge is to note the differences, keep in mind the dynamic range you’re limited to, and work with them to achieve a creative aim. And then use filmConvert to make them all look a million times better, and like film, of course. But I may be a bit biased on this last thing.

Art: What challenges have you had to deal with in the process?

Lance: Bizarrely, the non-obvious problem that we faced was camera selection. We started out looking just at the RED cameras, mainly because we have some RED cameras in house; then an Arri Alexa popped itself magically into our office, so they were obvious things to take a look at. And we were always keen on the Canon 5D, as it really started this wonderful “large sensors for the masses” movement. But from there the waters became quite a bit murkier. Which cameras are popular? Which cameras will be popular? What are people really shooting on? This was actually a scary question to answer: get it wrong, and we go under and all have to get real jobs again.

(left to right) Canon 5D footage processed through filmConvert to emulate Fuji’s 8563 motion picture stock and Kodak’s Portra 400 stills stock.

Art: If your software could change or advance the state of filmmaking, what would that look like?

Lance: Promoting great and simple tools for filmmakers.

Really that’s what we’re after: small gizmos that can help filmmakers and artists of all levels achieve their vision. If we’re not helping that vision then what’s the point? That technology has gotten so inexpensive that filmmakers can access these sophisticated sensors and techniques is fantastic, and if we can push this beautiful trend along just a little further then I think we’ve won.

Art: There may come a time when a generation of filmmakers doesn’t grow up with the “film look” that current generations have. What would you say to them about the film look?

Lance: I’ve actually thought about this for some time. It’s an obvious thing that styles and aesthetics change over time. This isn’t a bad thing; for example, I don’t suspect folks would be keen on using the aesthetic of the early silent film era on today’s stories. But I’d also tell folks to find a real print every now and then. It’s a strange thing to watch a real print after years of digital-only screenings, but it’s also a wonderful experience. I can’t quite describe it, but for serious storytellers it’s definitely something that needs to be seen first hand.

So while we’re looking backwards to some degree, it’s with the hope that people looking forward can see the inherent meaningfulness of this great 100-year-old technology and its ability to help pass an emotion to the audience. And there does seem to be a resonance here, where some filmmakers just get the idea right out of the box, and that’s wonderfully exciting for us to be a part of.

You can find out more about filmConvert on Rubber Monkey Software’s website. Thanks are due to Lance Lones for taking time out of his crazy schedule to answer my prying questions so thoughtfully and thoroughly.

Disclosure: Rubber Monkey provided me with a free copy of filmConvert for evaluation purposes.

About the Author

Director of photography Art Adams knew he wanted to look through cameras for a living at the age of 12. After spending his teenage years shooting short films on 8mm film he ventured to Los Angeles where he earned a degree in film production and then worked on feature films, TV series, commercials and music videos as a camera assistant, operator, and DP.

Art now lives in his native San Francisco Bay Area where he shoots commercials, visual effects, virals, web banners, mobile, interactive and special venue projects. He is a regular consultant to, and trainer for, DSC Labs, and has periodically consulted for Sony, Arri, Element Labs, PRG, Aastro and Cineo Lighting. His writing has appeared in HD Video Pro, American Cinematographer, Australian Cinematographer, Camera Operator Magazine and ProVideo Coalition. He is a current member of SMPTE and the International Cinematographers Guild, and a past active member of the SOC.

Art Adams

Director of Photography

www.artadamsdp.com

Twitter: @artadams