The HPA Tech Retreat is an annual gathering where film and TV industry folks discuss all manner of technical and business issues. It’s the sort of conference where the chap next to you is cutting shows on his MacBook Air; today he was alternating between Avid and FCP.

On this last day, HDR and color coding and reproduction get examined; we get updates from SMPTE and NABA, and John Watkinson returns to give static resolution another good thrashing. Plus, Mark Schubin’s post-retreat treat! As always, I’m taking notes and the occasional photo during the sessions, and posting them. It’s a poor substitute for being there yourself, but it’s better than nothing…

All the images can be clicked to make ‘em larger. My added comments are in [square brackets].

SMPTE Update – Peter Symes, SMPTE

There’s a lot happening; standards process improving: better, faster, trying to eliminate process delays.

Images: more & faster pixels: PQ EOTF, wide gamut, metadata. UHDTV ecosystem study group https://smpte.org/uhdtv-report

Sound: immersive audio, measurement techniques for theaters, new tech for theater calibration.

Metadata: SMPTE was first, and it shows. New approaches to integration, submission/approval, access.

Connectivity: MPEG over IP (7 documents; multipath), SDI (extending 3G to 6G and 12G, even thoughts of 24G), fiber interfaces.

JT-NM: Joint Task Force on Professional Networked Streaming Media. EBU, SMPTE, VSF. May replace SDI. >200 members. https://www.smpte.org/jt-nm

Control: in-service A/V sync documents in ballot. BXF 3.0 published, 4.0 in the works. Media Device Control over IP.

Workflows: Interoperable Master Format (IMF), Archive Exchange Format (AXF).

JTFFFMI: Joint Task Force on File Formats and Media Interoperability. AD-IS/AAAA, AMWA, ANA, EBU, others.

Better communications: quarterly reports at https://www.smpte.org/standards/meeting-reports, and the newsletter at https://smpte.org/standards/newsletter, to find out what’s going on.

SMPTE is more international: 2013 meetings in Hong Kong, San Jose, Munich, Atlanta; 2014 in Niagara, Tokyo, Geneva, … New sections in the UK and India.

Education: monthly webinars, CCNA online training, regional seminars coming in 2015, section meetings, and great conferences, including ETIA (Entertainment Technology in the Internet Age) at Stanford, June 17-18, 2014 (registration now open).

SMPTE will be 100 years old in 2016. It has reinvented itself several times and is evolving again, and you can help. “More to come – watch this space!”

Q: Why throw away SDI and move to IP?

A: No reason to throw it away just because there’s something new. There’s a tendency to say, “because something new is coming, throw the old stuff away”, but as long as the old stuff still works and is cost-effective, it’s not SMPTE’s job to denigrate old tech.

NABA Report and Initiatives on File-Based Workflows and Watermarking – Clyde Smith, Fox (Thomas Bause Mason, NBC, stuck in snow back east.)

Formerly the North American Broadcasters Association; a member of the World Broadcasting Unions. The File Format Working Group looked at problems with file-based workflows, investigated the challenges, and conducted member studies. Members said life was easy with linear workflows; then we moved to file-based, and everyone wrote their own delivery spec with no particular standards.

Three findings: (1) Harmonization must begin internally at each organization and, optimally, spread across the industry. (2) Standardize persistent identifiers: bind the ID directly to the essence (open binding working group). (3) All stakeholders should create a standardized XML representation of the file delivery spec and use it to drive delivery, processing, and verification.

Joint effort needed for a widely supported solution. There’s a foundation document, and working groups are forming; the chair is Chris Lennon. Please go to the website and enter your working requirements / use cases at http://bit.ly/1hc9jUF. The survey ends in March; data analysis and a draft report in April (we hope). PLEASE enter your use cases!

Q: Are you looking for an XML sidecar file with the delivery spec?

A: The ambiguity of the paper specifications: the level of detail is totally insufficient. 70% of Apple’s rejections are metadata-related. Yes, a manifest-style approach, generated by a machine and communicated to another machine, is the way to go. The DCI DCP spec is very useful as an example. All this ambiguity adds no value, only leads to failure, we need to come together as an industry to solve this. [applause]
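To make the manifest idea concrete, here is a minimal Python sketch built on a hypothetical XML vocabulary. The `deliverySpec`, `video`, and `audio` element names and their attributes are invented for illustration, since no NABA schema existed at the time. The sketch loads the spec and checks a file’s already-extracted metadata against it, reporting every mismatch instead of a bare pass/fail.

```python
import xml.etree.ElementTree as ET

# Hypothetical delivery spec; element and attribute names are illustrative only.
SPEC_XML = """
<deliverySpec name="example-hd-master">
  <video codec="ProRes 422 HQ" width="1920" height="1080" frameRate="24" range="legal"/>
  <audio channels="8" sampleRate="48000"/>
</deliverySpec>
"""


def load_spec(xml_text):
    """Turn each child element into a {tag: {attribute: expected value}} entry."""
    root = ET.fromstring(xml_text.strip())
    return {child.tag: dict(child.attrib) for child in root}


def verify(spec, file_metadata):
    """Compare extracted file metadata against the spec; return a list of mismatches."""
    problems = []
    for section, expected in spec.items():
        actual = file_metadata.get(section, {})
        for key, value in expected.items():
            # XML attribute values are strings, so compare string forms.
            if str(actual.get(key)) != value:
                problems.append(f"{section}.{key}: expected {value!r}, got {actual.get(key)!r}")
    return problems


if __name__ == "__main__":
    spec = load_spec(SPEC_XML)
    incoming = {
        "video": {"codec": "ProRes 422 HQ", "width": 1920, "height": 1080,
                  "frameRate": "24", "range": "full"},
        "audio": {"channels": 8, "sampleRate": 48000},
    }
    for problem in verify(spec, incoming):
        print(problem)
```

Given a file whose metadata says full range, this prints `video.range: expected 'legal', got 'full'`. The same manifest could drive the transcode on the sending side, which is the machine-to-machine point made above.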

Q: What about compatibility with SDI / ASF based transport?

A: No good bridge, no mapping between the two. Trying to solve the contribution problems first, then the distribution problems.

MovieLabs Proposals for Standardizing Higher Dynamic Range and Wider Color Gamut for Consumer Delivery

Program notes: “Last year, MovieLabs with its member studios developed the MovieLabs Specification for Next Generation Video for high dynamic range and full color gamut video distribution. This panel discusses some of the technologies that need to be standardized to realize the specification, including an HDR EOTF, mastering display metadata, and color differencing for XYZ. We also consider how other standards could build on these to address mastering workflows, additional consumer distribution use cases, and baseband video carriage.”

Moderator: Jim Helman, MovieLabs

Scott Miller, Dolby

Arjun Ramamurthy, Fox

Stefan Luka, Disney

Howard Lukk, Disney

JH: We work on digital distribution. 7 years ago, the studios asked us to work on common formats for home distribution. Lots more formats are relevant today. Previous generations looked at video standards and adopted them; now the studios want “more than just 4K”: wide color gamut (WCG), HDR, and enabling innovation that wasn’t possible before. We worked out a high-level spec. The next-gen spec is at http://movielabs.com/ngvideo

Future proofing and preserving creative intent. Things like Rec.709 constrained choices, hence TV settings like the “vivid” option, yuck. Solution: large, future-proof container: 10,000 nit peak luminance, perceptual EOTF (Electro-Optical Transfer Function, how to turn code words into visible light) + full XYZ gamut, plus metadata on color volume used.

Started from BT.2020 UHDTV. Added HD, 4K, 48fps, higher frame rates; no fractional rates [applause], HDR, 12-bit minimum (adds 14- and 16-bit, 4:2:0, 4:2:2, 4:4:4), Mastering Display Metadata (MDM). Also, HDR is not tied to X’Y’Z’: EOTF + Mastering Metadata are usable with BT.2020 or BT.709 primaries.

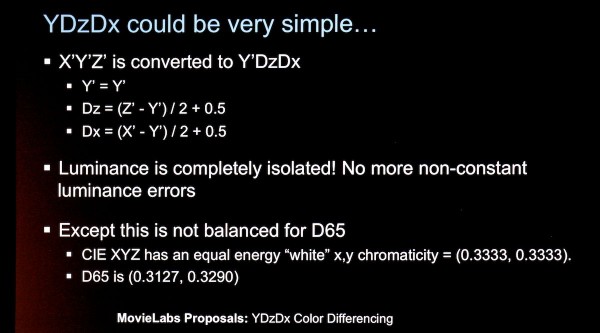

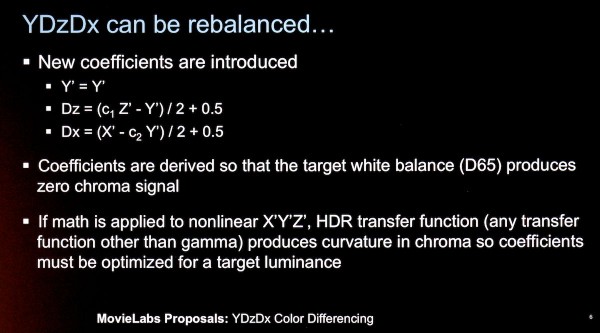

HDR EOTF, MDM, Color difference for XYZ (YDzDx for color subsampling), more.

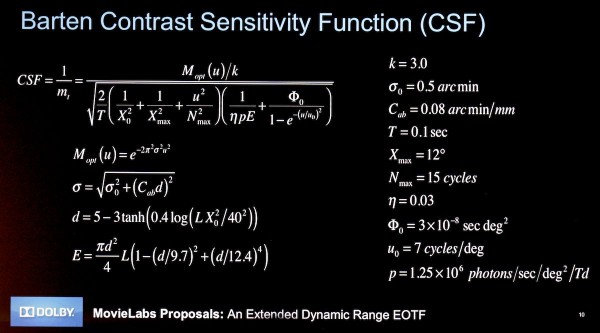

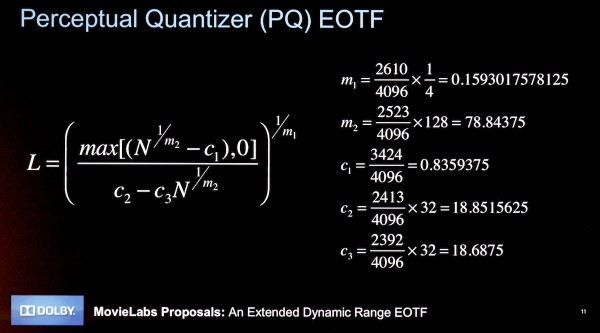

Scott: Extended Dynamic Range EOTF. Critical, because it defines what you see. Why a new EOTF? It should match the HVS (human visual system), but the current EOTF is based on CRTs. Current gamma was finally defined two years ago (BT.1886); it works well enough for CRTs but doesn’t handle high brightness. New EOTF: 0-10,000 nit range, assume 12-bit max, use the HVS to determine the curve. [same Dolby slides from yesterday regarding PQ] From that, you get the Barten function:

or a more simplified version:

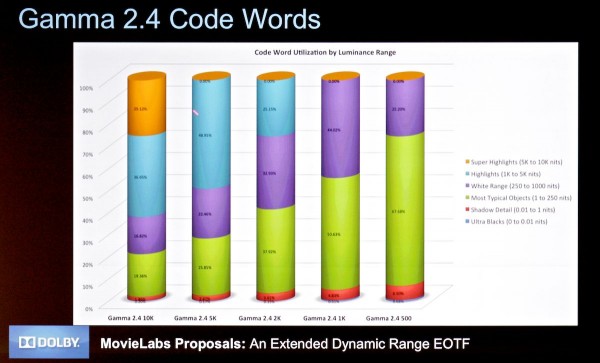

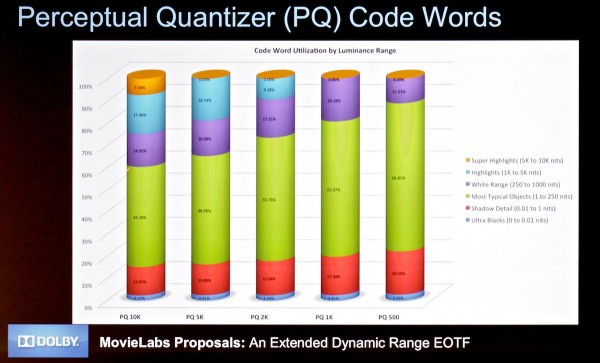

Q: Doesn’t the 10K nit range waste a lot of code words? A: No, from 5K to 10K nits is only 7% of the code words. Midpoint of the PQ range is 93 nits. PQ handles the range much better than gamma:

Conclusion: we need a new EOTF, gamma just doesn’t cut it. PQ works well with 12 bits, each code value is just under a perceptual step, handles the very useful 10K nit brightness level. Many possible use cases, the HDR replacement for gamma. SMPTE 10E committee working on perceptual EOTF, likely starting HDR / WCG task force too.

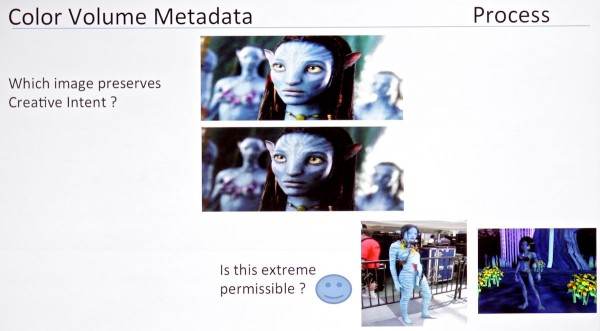

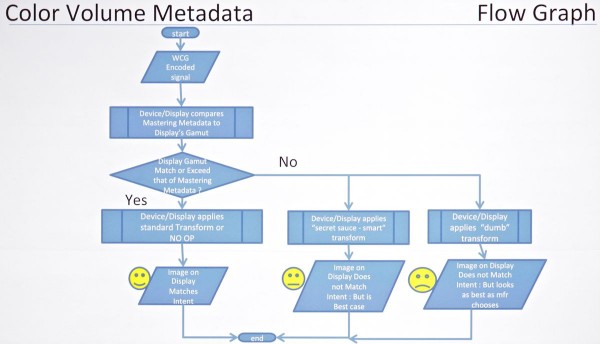
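The PQ curve is compact enough to sketch. This Python version assumes the constants later published in SMPTE ST 2084 (the standard was not yet out at the time of this talk). It lets you check the two numbers from the Q&A: the midpoint code lands in the low 90s of nits, and the 5,000 to 10,000 nit span takes only about 7% of the code range.

```python
# Constants as later published in SMPTE ST 2084 (assumed here; the talk predates publication).
M1 = 2610 / 16384
M2 = 2523 / 4096 * 128
C1 = 3424 / 4096
C2 = 2413 / 4096 * 32
C3 = 2392 / 4096 * 32
PEAK_NITS = 10000.0


def pq_eotf(code):
    """Map a normalized PQ code value (0..1) to absolute luminance in cd/m^2 (nits)."""
    e = code ** (1 / M2)
    return PEAK_NITS * (max(e - C1, 0.0) / (C2 - C3 * e)) ** (1 / M1)


def pq_inverse_eotf(nits):
    """Map absolute luminance in nits (0..10,000) to a normalized PQ code value."""
    y = (nits / PEAK_NITS) ** M1
    return ((C1 + C2 * y) / (1 + C3 * y)) ** M2


if __name__ == "__main__":
    print("midpoint code ->", pq_eotf(0.5), "nits")
    print("share of code range above 5,000 nits:", 1 - pq_inverse_eotf(5000))
    # Multiply a normalized code by 4095 to get a 12-bit code value.
    print("12-bit code for 100 nits:", round(pq_inverse_eotf(100) * 4095))
```

`pq_eotf(0.5)` comes out at roughly 92 nits, and `1 - pq_inverse_eotf(5000)` at about 0.07, in line with the figures quoted in the Q&A.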

Arjun: Mastering Display Color Volume Metadata. What’s the problem we’re trying to solve? Preserving creative intent. You know the CIE color space diagram, handles color but not brightness levels; hence the need for color volumes [see Dolby color volume preso from yesterday]. Problem: nothing about the color volume from the mastering display is conveyed downstream; fine if all displays are 100 nits, 601 or 709 color space. But when displays vary:

Required metadata: RGB primaries, white point, max/min display luminances in cd/m² (nits).

SMPTE drafting group working on a standard. Need to define transport mechanisms: MPEG SEI Messaging, XML sidecar, within essence wrapper, separate delivery channel.

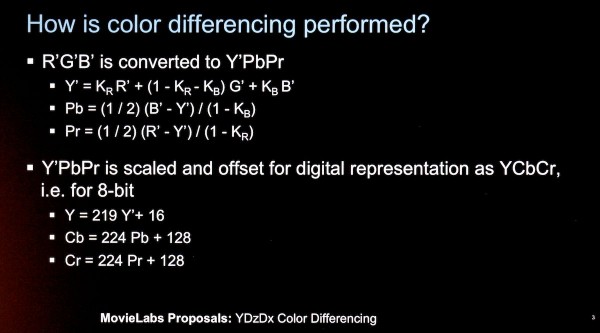

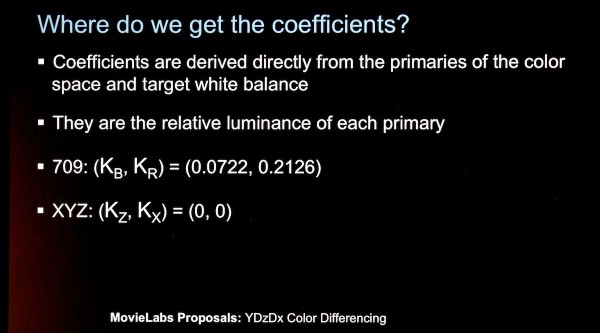
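As a data structure, the required metadata is small. This Python sketch holds the listed items and quantizes them to integers. The step sizes (chromaticity in units of 0.00002, luminance in units of 0.0001 cd/m²) are an assumption borrowed from the mastering display colour volume SEI message that HEVC later adopted. The class and field names are hypothetical, not taken from the SMPTE draft.

```python
from dataclasses import dataclass

CHROMA_STEP = 0.00002  # chromaticity units (assumed, per later HEVC SEI practice)
LUMA_STEP = 0.0001     # luminance units in cd/m^2 (same assumption)


@dataclass
class MasteringDisplay:
    """Hypothetical mastering display color volume; chromaticities are CIE 1931 (x, y)."""
    red: tuple
    green: tuple
    blue: tuple
    white: tuple
    max_nits: float
    min_nits: float

    def to_codes(self):
        """Quantize every field to integers for carriage in a bitstream or sidecar."""
        def xy(point):
            return (round(point[0] / CHROMA_STEP), round(point[1] / CHROMA_STEP))

        return {
            "primaries": [xy(self.red), xy(self.green), xy(self.blue)],
            "white_point": xy(self.white),
            "max_luminance": round(self.max_nits / LUMA_STEP),
            "min_luminance": round(self.min_nits / LUMA_STEP),
        }


if __name__ == "__main__":
    # Example: a display with P3 primaries and a D65 white, mastering at 1000 nits peak.
    p3_d65 = MasteringDisplay(red=(0.680, 0.320), green=(0.265, 0.690),
                              blue=(0.150, 0.060), white=(0.3127, 0.3290),
                              max_nits=1000.0, min_nits=0.005)
    print(p3_d65.to_codes())
```

The same integer dictionary could be serialized into any of the transport options listed above (SEI message, XML sidecar, wrapper metadata). The sketch does not commit to one of them.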

Stefan: Color differencing: optimize for characteristics of HVS, we see more detail in luma than chroma, so chroma can be subsampled and/or compressed more heavily.

How it’s done:

This works great with a linear signal, or even gamma-corrected, not so good for log or PQ-encoded signals.
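The slide isn’t reproduced here, but the conventional color-difference path is easy to sketch. This Python example applies the BT.709 luma coefficients to gamma-corrected R’G’B’ (a stand-in, not necessarily what the slide showed). It then builds 4:2:0 chroma by plain 2x2 averaging. That box filter is an assumption; real systems use better filters and defined chroma siting.

```python
# BT.709 luma coefficients; inputs are gamma-corrected R'G'B' in the range 0..1.
KR, KB = 0.2126, 0.0722
KG = 1 - KR - KB


def rgb_to_ycbcr(r, g, b):
    """Return (Y', Cb, Cr) with Cb and Cr scaled into -0.5..0.5."""
    y = KR * r + KG * g + KB * b
    cb = (b - y) / (2 * (1 - KB))
    cr = (r - y) / (2 * (1 - KR))
    return y, cb, cr


def subsample_420(plane):
    """Average each 2x2 block of a chroma plane (list of rows with even dimensions)."""
    return [
        [(plane[i][j] + plane[i][j + 1] + plane[i + 1][j] + plane[i + 1][j + 1]) / 4
         for j in range(0, len(plane[0]), 2)]
        for i in range(0, len(plane), 2)
    ]


if __name__ == "__main__":
    print(rgb_to_ycbcr(1.0, 0.0, 0.0))       # pure red
    print(subsample_420([[0.1, 0.3], [0.1, 0.3]]))
```

Luma keeps full resolution while each chroma plane drops to a quarter of the samples. That is why the interpolation errors mentioned below show up in color rather than in detail.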

Impact on compression: a wider color space means bigger visual steps. X & Z don’t point in the same directions as B and R; they’re not oriented the same way as in YCbCr. Need to tweak coefficients. Color differencing paired with subsampling can lead to color errors due to interpolation; MDM can be utilized to reduce this.

Color differencing can be done on X’Y’Z’; it’s similar to R’G’B’ with some additional work.

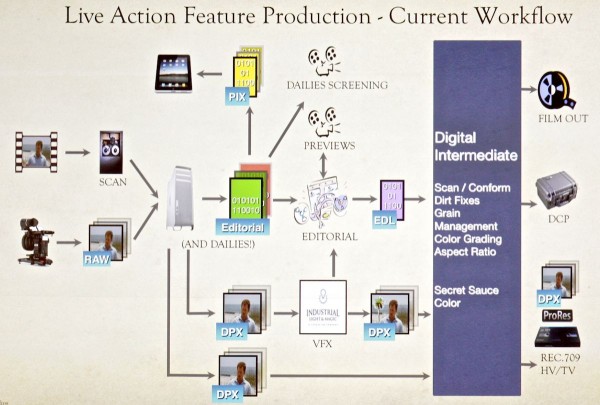
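For the X’Y’Z’ case, here is a Python sketch of a Y’DzDx-style transform. The coefficients (0.986566 and 0.991902) are as we recall them from the later SMPTE ST 2085 / HEVC matrix-coefficients entry for Y’DzDx. Treat them as an assumption and check them against the standard. Y’ passes straight through as luma, and the two differences are halved so they stay within about ±0.5.

```python
# Coefficients as recalled from SMPTE ST 2085 (assumption; verify before relying on them).
KZ = 0.986566
KX = 0.991902


def xyz_to_ydzdx(x, y, z):
    """Color-difference encode PQ-encoded X'Y'Z' (each 0..1) into (Y', Dz, Dx)."""
    dz = (KZ * z - y) / 2
    dx = (x - KX * y) / 2
    return y, dz, dx


def ydzdx_to_xyz(y, dz, dx):
    """Invert the transform back to X'Y'Z'."""
    z = (2 * dz + y) / KZ
    x = 2 * dx + KX * y
    return x, y, z


if __name__ == "__main__":
    print(xyz_to_ydzdx(0.45, 0.5, 0.55))
    print(ydzdx_to_xyz(*xyz_to_ydzdx(0.45, 0.5, 0.55)))
```

Note that zero Dz and Dx occur when X’ and Z’ sit in a fixed ratio to Y’, not when X’ = Y’ = Z’. This is one reason the Q&A below notes that "achromatic" signals aren’t equal in this space.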

Howard: HDR Workflow for Motion Pictures. “if you weren’t afraid before, be afraid now!” Current workflow: shoot film & scan or shoot raw, send the files ‘round for work:

Future: prepare for a 16-bit pipeline, not 10-bit; you’ll need it. Also ACES. DPX becomes OpenEXR [all the DPX items in the center of the previous diagram turn into EXR items, and the final, bottom-right distribution step gets replaced by IMF files].

We’re dying for a Rec.2020 monitor, both for grading and for editorial. We have the problem that people grade on 709, then get a 10K nit monitor and just turn the brightness up, which is the wrong way to go.

Jim: Compressed carriage: codecs don’t care too much about EOTF and color space. HEVC works for 12-bit HDR at high bit rates. Proposed HEVC additions: a Main 12 profile including 4:2:0; extended VUI metadata for HDR EOTF, XYZ, YDzDx. Baseband (CEA, HDMI, MHL): need signaling for HDR EOTF, XYZ, Y’DzDx, MDM. Also, 48fps is not supported yet. Lots of standards work in progress: SMPTE TC10E HDR/WCG Study Group, MPEG HDR/WCG Ad-Hoc Group. Still a lot of work to be done!

Q: When we perform operations with multiple gamma-corrected pixels, we cheat. Much better to go back to linear. Assume you’re not contemplating 1400-bit linear space for this?

A: Something to think about, though you can do quite a bit in the PQ domain. It’s a cheat, but it’s roughly perceptual, so you get similar types of results to using gamma-corrected values.

Q: How do we actually get 10K nits?

A: 10K nits is not scary, we see it all the time, like the surface of a fluorescent tube.

Q: XYZ, departing from D65, nonlinear transform function. Achromatic signals are not equal, very uncomfortable for video engineers when a zero value of chroma can show color!

A: Yes, need to take the EOTF out of the signal to avoid color errors; so do things like processing in the linear domain.

Q: Why rebalance back to D65? So archaic. Why not P3 white points?

A: Digital cinema different from home delivery requirements. In the home, there may be image mixing beyond our control [titles & graphics overlaid by the broadcaster?], need to use common white point.

Q: Given all the issues with color difference, why bother?

A: We’d love to get to 4:4:4, but there are bandwidth limits: e.g., HDMI can carry 4K or 4:4:4, but not both.

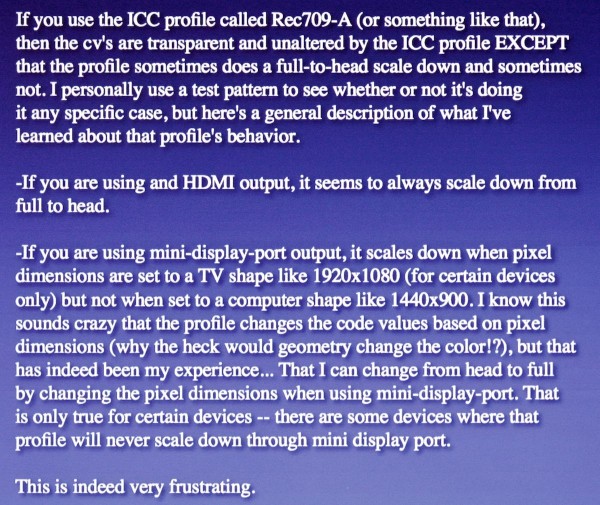
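Here is a back-of-envelope Python sketch of the bandwidth tradeoff. It computes the uncompressed active-picture data rate for a given frame size, frame rate, bit depth, and chroma sampling. It deliberately ignores blanking intervals and link encoding overhead. It also doesn’t assume any particular HDMI capacity, so compare the results against whatever link budget applies.

```python
# Samples per pixel for common chroma subsampling schemes.
SAMPLES_PER_PIXEL = {"4:4:4": 3.0, "4:2:2": 2.0, "4:2:0": 1.5}


def active_video_bps(width, height, fps, bits_per_sample, sampling):
    """Uncompressed active-picture data rate in bits/s (ignores blanking and link coding)."""
    return width * height * fps * bits_per_sample * SAMPLES_PER_PIXEL[sampling]


if __name__ == "__main__":
    for scheme in ("4:4:4", "4:2:2", "4:2:0"):
        gbps = active_video_bps(3840, 2160, 60, 10, scheme) / 1e9
        print(f"3840x2160p60, 10-bit {scheme}: {gbps:.2f} Gbit/s")
```

At 3840x2160, 60fps, and 10 bits, 4:4:4 needs about 14.93 Gbit/s of active picture, while 4:2:0 needs about 7.46 Gbit/s. Subsampling halves the payload, which is the trade the answer describes.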

What’s Illegal Color/Colour? – Josh Pines, Technicolor and Charles Poynton

Josh: We have a problem: Quicktimes and ProReses and suchlike used all over, DNxHD for Avid, digital files with codecs: it’s a coin toss whether the data will be in full range or legal range, it’s a 50/50 chance. There’s often a person at facilities whose full-time job is checking for full vs legal range! A plea for some entity somewhere to come up with a standard, otherwise let’s jettison Quicktimes as a reference since there’s absolutely no way to control them!
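Converting between the two ranges is simple arithmetic; knowing which one you were handed is the hard part. This Python sketch covers 10-bit luma and assumes the usual BT.709/BT.2020 legal range of 64 to 940. Chroma, which uses 64 to 960, is left out for brevity. The range detector is only a heuristic with an arbitrary 1% threshold, because legal-range material can legitimately contain super-blacks and super-whites, as Charles argues next.

```python
LEGAL_MIN, LEGAL_MAX = 64, 940  # 10-bit legal (video) range for luma
FULL_MAX = 1023                  # 10-bit full range for luma


def legal_to_full_luma(code):
    """Stretch a 10-bit legal-range luma code to full range (no clipping applied)."""
    return round((code - LEGAL_MIN) * FULL_MAX / (LEGAL_MAX - LEGAL_MIN))


def full_to_legal_luma(code):
    """Squeeze a 10-bit full-range luma code into legal range."""
    return round(code * (LEGAL_MAX - LEGAL_MIN) / FULL_MAX + LEGAL_MIN)


def looks_full_range(luma_codes, threshold=0.01):
    """Heuristic guess: True if more than `threshold` of samples fall outside 64..940."""
    outside = sum(1 for c in luma_codes if c < LEGAL_MIN or c > LEGAL_MAX)
    return outside / len(luma_codes) > threshold


if __name__ == "__main__":
    print(legal_to_full_luma(940), full_to_legal_luma(0))
    print(looks_full_range([0, 512, 1023] * 10))
```

Applying `legal_to_full_luma` to material that was already full range (or the reverse) produces exactly the crushed or lifted blacks that keep that full-time checker employed.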

Charles: Legalizers should be outlawed. It’s common practice for QC houses to reject any material with super-blacks or super-whites. Why do we do it? Should we write a doc to the CE people saying: ride the BT.1886 2.4 curve through 940 (reference white) all the way up through 1019 without clipping (and down below reference black, too)? My assertion is this: (a) in the reserve region at the bottom, make it go blacker; that’s HDR. (b) In the reserve region at the top, make it go whiter: HDR specular highlights. Extra headroom in Cb, Cr: use it for wider gamut. Just turn off your clippers and gamut alarms, and use the existing YCbCr number space with wider-range data.
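Charles’s proposal amounts to evaluating the BT.1886 EOTF on code values outside 64 to 940 instead of clipping them. This Python sketch assumes a 100-nit reference white and the standard 10-bit normalization (64 maps to V = 0, 940 to V = 1). With a zero black level, code 1019 comes out at roughly 123 nits, about 23% above reference white. With a nonzero black level, codes below 64 render darker than reference black.

```python
def bt1886_nits(code10, lw=100.0, lb=0.0, gamma=2.4):
    """BT.1886 EOTF on a 10-bit code, normalizing 64 -> 0 and 940 -> 1,
    but NOT clipping codes above 940 or below 64 (the extension proposed above)."""
    v = (code10 - 64) / 876
    span = lw ** (1 / gamma) - lb ** (1 / gamma)
    a = span ** gamma                # user gain
    b = lb ** (1 / gamma) / span     # black-level lift
    return a * max(v + b, 0.0) ** gamma


if __name__ == "__main__":
    for code in (64, 940, 1019):
        print(code, round(bt1886_nits(code), 2))
    print("below black with lb=0.1:", bt1886_nits(40, lb=0.1))
```

Nothing in the number space changes; only the decision to stop clipping at 940 and 64 does.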

Digital Imaging with LEDs: More than Meets the Eye – Mike Wagner, ARRI

We at ARRI are already beyond 4K:

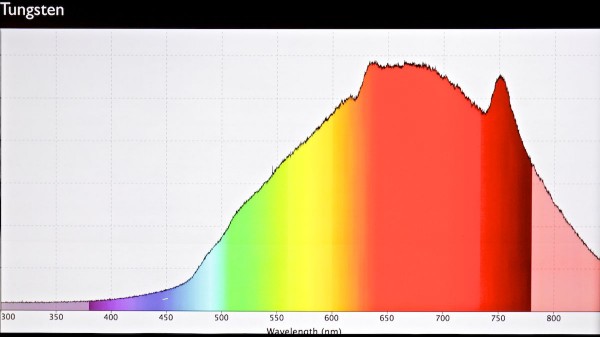

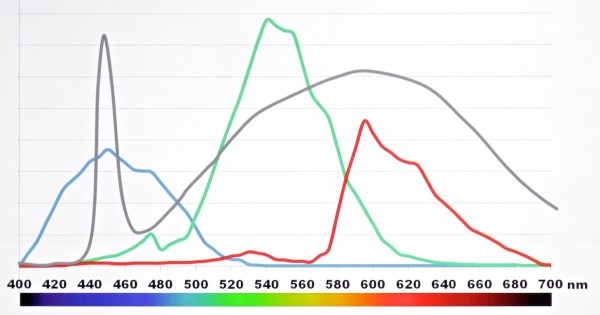

Creating white light: Tungsten is pretty much a continuous curve:

This spectrum was created using a visible-light spectrometer, so you don’t see all the heat & IR.

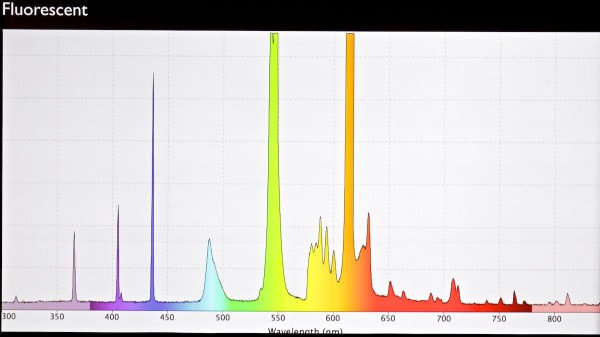
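Tungsten’s smooth curve is close to that of an ideal blackbody, so Planck’s law makes a reasonable stand-in. This Python sketch treats a 3200K tungsten source as a perfect blackbody, which is an assumption, since real filaments deviate somewhat. It normalizes the visible spectrum to 560 nm. At that temperature the emission peak sits in the infrared, near 900 nm, so the visible curve rises steadily toward red.

```python
import math

H = 6.62607015e-34  # Planck constant, J*s
C = 2.99792458e8    # speed of light, m/s
K = 1.380649e-23    # Boltzmann constant, J/K


def planck(wavelength_nm, temp_k):
    """Blackbody spectral radiance (W / (sr * m^3)) at one wavelength."""
    lam = wavelength_nm * 1e-9
    return (2 * H * C ** 2 / lam ** 5) / math.expm1(H * C / (lam * K * temp_k))


def relative_spd(temp_k, wavelengths=range(380, 781, 10), ref_nm=560):
    """Spectrum across the visible band, normalized to 1.0 at ref_nm."""
    ref = planck(ref_nm, temp_k)
    return {wl: planck(wl, temp_k) / ref for wl in wavelengths}


if __name__ == "__main__":
    for wl, power in relative_spd(3200).items():
        print(wl, round(power, 3))
```

Plotting this next to a fluorescent or LED spectrum shows the contrast the demo made: one continuous curve versus a handful of spikes.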

Fluorescent is still a white light [practical demo; he has several light sources lined up on a stand].

Fluorescent is a bit spiky:

…created by UV light hitting phosphors [demos using UV lights and rocks, as well as showing slides]

They grind up all these rocks and make ‘em into powders [demo of bags of white powders that glow R, G, B under UV light]. Vendors mix these powders in their own recipes to get white or whatever color they want. Spiky, peaks and valleys, discontinuous source.

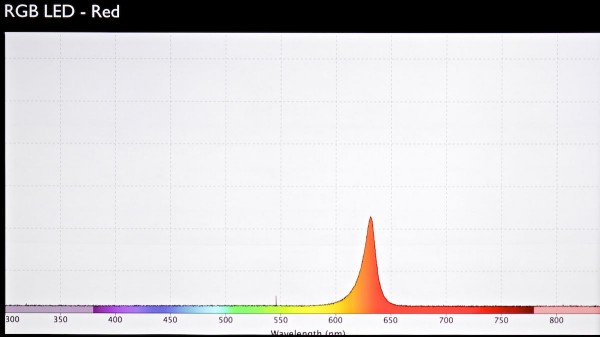

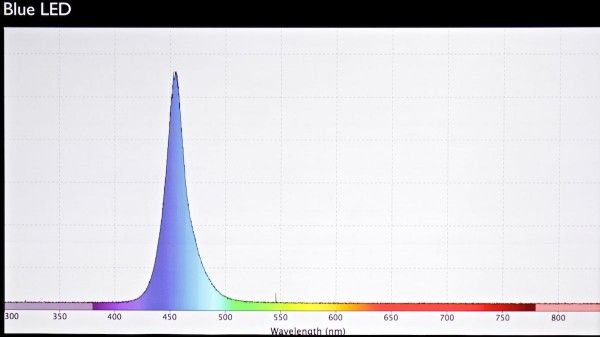

Moving forward: a simple LED bulb:

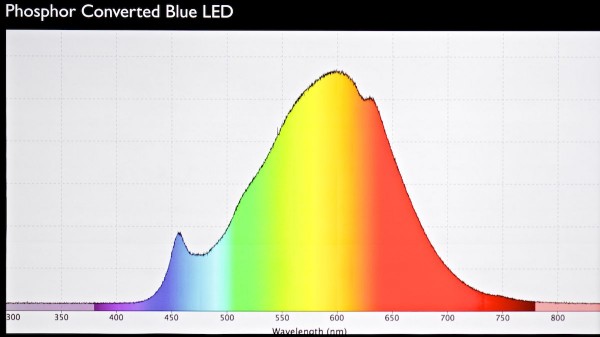

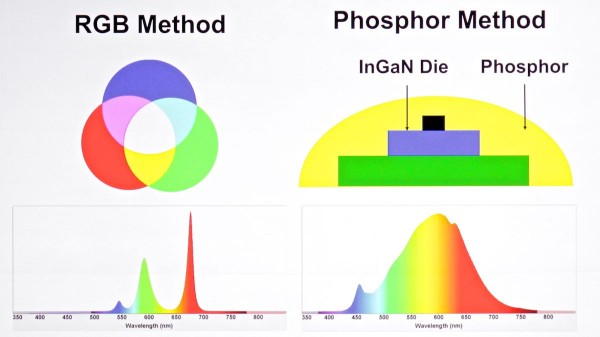

Phosphor domes atop bright blue LEDs add colors:

There is no actual white LED; they use blue LEDs with phosphors added. Remote phosphors, or adding a dome of phosphor or a paint layer right on the LED. There are arguments for both ways of doing it.

You can also add more LED colors to the mix, with 7 or even 9 colors.

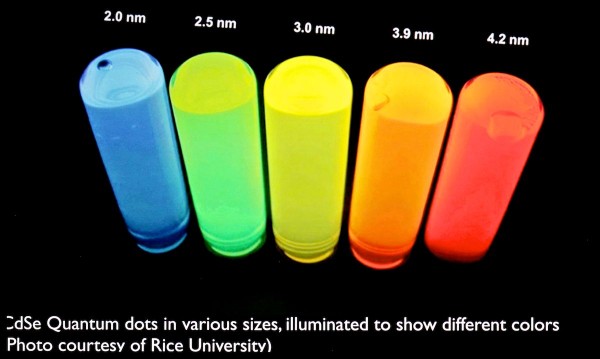

Quantum dots: depending on particle size, get different wavelengths:

Not ready yet for lighting tech, but very interesting, promising.

Color metamerism: [demo with 7-color LED light with programmable color changes, showing various “white” settings, ranging from 2830K to 2980K with varying +/- green CC values. Vibrant fabric, with greens and oranges: the fabric changed dramatically as the “white” light changed! Different settings mixed white and amber; red and cyan; red, green, and blue; red, green, indigo; amber, cyan and indigo; red, green, blue, and white; and all 7 colors mixed.]

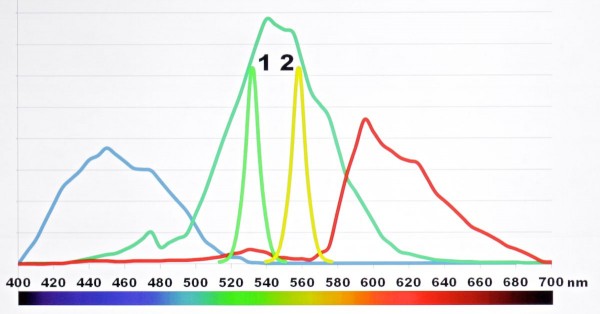

HVS sensitivity vs camera response: camera sensors have photosites that aren’t color-sensitive, use color filter arrays to capture color. Color filters picked to somewhat match HVS, without too much color overlap. Look at a blue LED compared to camera sensitivity:

[blue LED spectrum in gray] A gelled tungsten light is still a broadband source. Colored LEDs aren’t broadband; they’re spiky. Look at how a camera would see ‘em:

[These might be visually distinct to us, but the camera shown might see them as almost the same color.]

Not only do the lights emit different spectra, the cameras have different spectral sensitivities. [demo of various scenes lit by different LED sources, comparing colors.]
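The camera side of this is an overlap calculation: each channel’s response is the source spectrum weighted by that channel’s sensitivity, summed over wavelength. Here is a toy Python sketch with entirely hypothetical Gaussian sensitivities (peaks at 600, 540, and 450 nm, 30 nm wide) rather than any real camera’s curves. Feeding it narrow LED lines versus a broadband spectrum shows how a spiky source’s apparent color depends on where its peaks land relative to the filter curves.

```python
import math

# Hypothetical camera: Gaussian channel sensitivities as (peak nm, sigma nm).
CAMERA = {"R": (600, 30), "G": (540, 30), "B": (450, 30)}


def sensitivity(channel, wl_nm):
    """Relative sensitivity of one channel at a wavelength (1.0 at the peak)."""
    peak, sigma = CAMERA[channel]
    return math.exp(-((wl_nm - peak) ** 2) / (2 * sigma ** 2))


def camera_rgb(spd):
    """spd maps wavelength (nm) -> relative power; returns the summed response per channel."""
    return {ch: sum(p * sensitivity(ch, wl) for wl, p in spd.items()) for ch in CAMERA}


def rgb_chromaticity(resp):
    """Normalize responses so they sum to 1, discarding overall brightness."""
    total = sum(resp.values())
    return {ch: v / total for ch, v in resp.items()}


if __name__ == "__main__":
    flat = {wl: 1.0 for wl in range(400, 701, 5)}   # idealized broadband source
    blue_led = {450: 1.0}                            # idealized single-line LED
    print(rgb_chromaticity(camera_rgb(flat)))
    print(rgb_chromaticity(camera_rgb(blue_led)))
```

Swapping in a different `CAMERA` table while keeping the same sources is the code equivalent of the point above: different sensors can disagree about the same light.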

How do we quantify these differences? CRI (Color Rendering Index) is easy to fool: it uses only 8 to 14 specific reference colors and averages the results. CQS (Color Quality Scale) uses 15 colors and root-mean-square values. TLCI (Television Lighting Consistency Index) looks at camera responses. PLASA is working on standards.
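The difference between averaging and root-mean-square aggregation is easy to see with numbers. In this Python sketch, the CRI-style score follows the CIE special-index form R_i = 100 - 4.6 ΔE_i, averaged over the samples. The RMS function only illustrates the kind of aggregation CQS uses; CQS adds its own scaling and other adjustments that are not reproduced here. The ΔE values are made up.

```python
import math


def cri_style_index(delta_es):
    """Average of CRI-style special indices R_i = 100 - 4.6 * dE_i."""
    return sum(100 - 4.6 * de for de in delta_es) / len(delta_es)


def rms(delta_es):
    """Root-mean-square color error; large individual errors weigh more."""
    return math.sqrt(sum(de * de for de in delta_es) / len(delta_es))


if __name__ == "__main__":
    errors = [1.0] * 7 + [9.0]  # seven good samples, one badly rendered color
    print("CRI-style average:", cri_style_index(errors))
    print("mean error:", sum(errors) / len(errors), "RMS error:", rms(errors))
```

With seven samples at ΔE 1 and one at ΔE 9, the CRI-style score is a respectable 90.8. The RMS error (about 3.3) sits well above the mean error of 2, which shows how RMS penalizes the one color a light gets badly wrong.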

Confusing? Yes. There’s so much more than meets the eye.

Spectral Color as an Alternative to CIE 1931 – Gary Demos, Image Essence LLC

An update on a five-year-old preso at HPA, minimizing color variation. A puzzle requiring attention: in any imaging workflow, what is the applicable spectral map at each point?

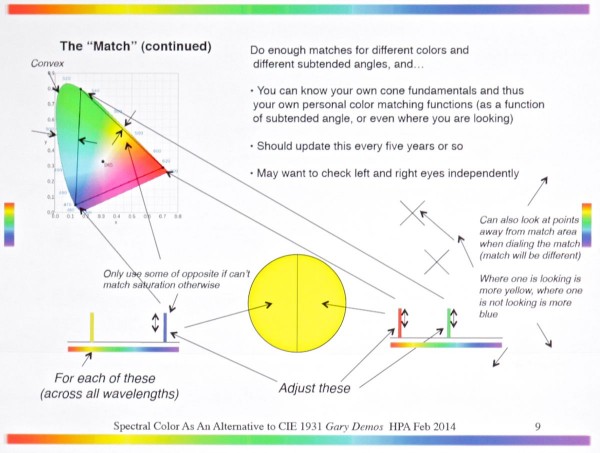

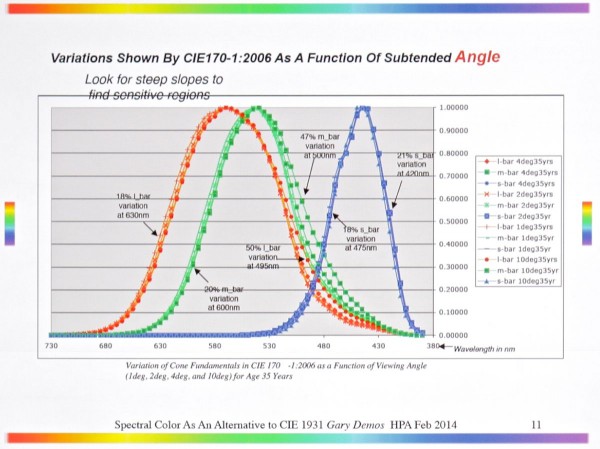

CIE 1931 is about how we see, but its authors didn’t understand how our color perception differs depending on whether something is large or small in the field of view. Only 17 test observers. In 1959, researchers tested 49 observers and built the 1964 standard observer. When we see the CIE “horseshoe” spectral maps, the chromaticity [Gary was going a mile a minute, both with his slides and his talk, and I simply fell behind and “lost sync”; I couldn’t keep up. What follows is a very fragmentary rendering of what he discussed. Apologies.]

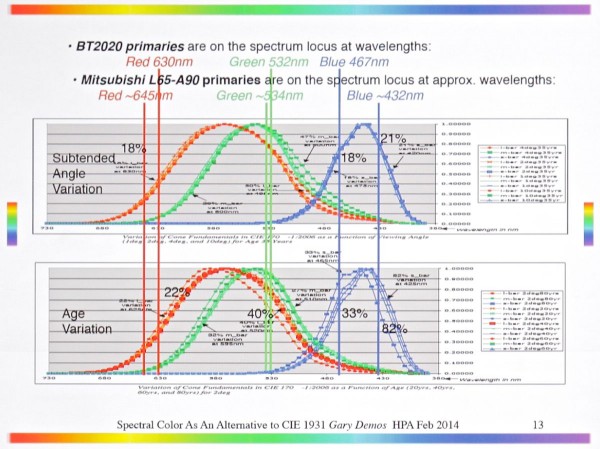

BT.2020 sits right on the Spectrum Locus.
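BT.2020’s primaries are monochromatic (about 630, 532, and 467 nm), which is why they land on the locus. One crude way to compare gamuts is the area of the primaries’ triangle in the xy diagram, and this Python sketch does that for BT.709 and BT.2020. Keep in mind that xy is not perceptually uniform, so area ratios are only a rough guide.

```python
# CIE 1931 xy chromaticities of the R, G, B primaries.
PRIMARIES = {
    "BT.709": [(0.640, 0.330), (0.300, 0.600), (0.150, 0.060)],
    "BT.2020": [(0.708, 0.292), (0.170, 0.797), (0.131, 0.046)],
}


def triangle_area(points):
    """Shoelace formula for the area of the triangle spanned by three xy points."""
    (x1, y1), (x2, y2), (x3, y3) = points
    return abs(x1 * (y2 - y3) + x2 * (y3 - y1) + x3 * (y1 - y2)) / 2


if __name__ == "__main__":
    areas = {name: triangle_area(pts) for name, pts in PRIMARIES.items()}
    print(areas)
    print("BT.2020 / BT.709 area ratio:", areas["BT.2020"] / areas["BT.709"])
```

The BT.2020 triangle covers roughly 1.89 times the xy area of BT.709.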

Color matching:

An attempt to bring color matching up to date; parametric by viewing angle:

Also parametric by age. When we put BT.2020 primaries on these curves:

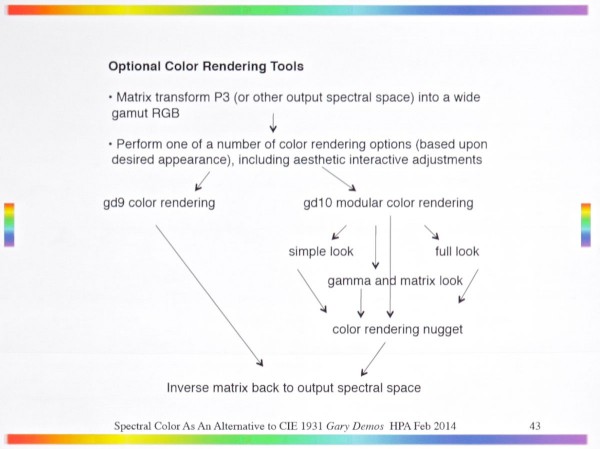

…they fall in really bad places. When you’re … there’s a yellow spot on the back of the eye, so where colors hit the retina changes how they look (yellow mostly in the focal point, so blue in the margins) … a lot of different sensing spectra [from the Canon 5D, Thomson Viper, others] … LED spectra … If you have more than three primaries, get something with broad spectra. Very tricky to calibrate with narrow spikes. Sharp Quattron (RGB+Y), RGB+White … IR and UV, radar, thermal noise, shot noise, fixed-pattern noise … Vision confounds! … Display issues … Color appearance … Confounds from cameras: the red sensing spectrum is pushed further out than the cone fundamentals, which increases color distinction … We care what happens in the mastering suite; what we want is not the chromaticity of the mastering suite but the emission spectra, so we can model what the creative person was seeing.

Apply spectral color at presentation. That’s what’s happening with the Sharp Quattron. Personal info: how old are your eyes, how close are you, ambient surround, you could tune the emission to your viewing particulars… bridging the gap… spectral sensing is separated in the cortex… Models this, slides the red curve down and the green curve up (in frequency)…

Knowing the spectral functions of the camera, map onto cone fundamentals; know the emission spectra of the RGB display; had raw data from the Viper camera, ARRI Alexa, and 5D Mk II … How does E [the equal-energy point] process through the system? Camera sensing is not linearly transformable into cone fundamentals … the more we know about spectral sensitivities (not one-size-fits-all like CIE 1931), the better … not technically hard, but it does require stretching your brain …

I think the errors are being cut down by a factor of three. What do we know? Cameras, we know the spectral sensing function, in mastering we know the emissions of the display, we should get the cone fundamentals of the mastering (viewer age, screen size), for the viewer we know about display and cone fundamentals and surround. Can use all of these to improve rendering.

Q: Might we also want to capture surround lighting in grading suite?

A: Yes.

Q: How big a deal is it to replicate the CIE tests? Could we do it at HPA ’15 and get hundreds of observers?

A: Good idea! An excellent thing to do.

Q: You suggested keeping a record of the observer. If you have multiple creatives in grading room, who do you record?

A: Do you want to see the movie as Steven Spielberg or Lou Levinson saw it? An interesting puzzle. At least I want to see what’s emitted, so I know what they were looking at. Step 2 is getting their information. Do I know if they’re long or short or shifted 4 nanometers?

Dynamic Resolution: Why Static Resolution Is Not a Meaningful Metric for Motion Pictures – John Watkinson

One or two physical characteristics I don’t share with other people, like the length of my hair. Some think it’s for political reasons, and they ask. I say I’m a latent Marxist-Schubinist!

One thing got my attention, that extremely bright Dolby display [4000 nit display in demo room]. It’s abundantly clear that you can’t show 24Hz material on it [applause]. I liked how it showed every camera defect. 24Hz is dead and it took an audio engineer to kill it! [applause]

The other cool thing was the Tessive MTF measurement. He tested a lot of 4K cameras, none had 4K resolution. And that was on a static picture, because that’s all you can test. All we have now is a series of stills, and it’s not the same as true motion pictures.

The 24Hz we use has nothing to do with psycho-optics, nor does 50Hz or 60Hz. You could argue that 24Hz was optimized to sell popcorn, 50/60Hz to sell soap: it makes as much sense.

Imaging hasn’t embraced potential of digital tech. Fractional frame rates are hilarious: what subcarrier? What crosstalk? That’s what you should be laughing at.

DTV is simply analog TV with all the mistakes described as a series of numbers. And it’s the same with digital cinema. Same old stuff with a bunch of numbers, and that’s missing a few tricks.

We still use frame-based moving pictures because that’s all that was possible with film. With engines, we just kept adding pistons: the B-36 had 6 engines with 28 cylinders each… until the jet engine came along. Jets are continuous flow, not discrete explosions. We’re still in the discrete-explosion stage of motion pictures; we need to move to continuous flow.

We measure static resolution as we did in photography; that made sense for stills because stills don’t move. Also, it’s all we can measure, whether it’s relevant or not. I question whether static res is meaningful for motion pictures; it’s like measuring a Ferrari by the performance of its parking brake.

The flaw in static resolution is eye tracking, a.k.a. smooth pursuit. The bandwidth of vision isn’t very high; we can’t perceive rapid changes in brightness, but motion creates rapid changes, so we eye-track to make moving things stand still visually. What frame rate do you need for a stationary image? Zero? For the parts that the eye is tracking, frame rate zero is adequate. But in the real world, the rest of the field of view is blurred, because it’s moving over the retina. Whatever you’re not tracking will be blurred smoothly [in the real world].

That doesn’t happen in a frame-based imaging system: the background appears as a series of discrete objects, presented in a series of jumps: background strobing. What frame rate do we need to eliminate it? Infinity? Doubling from 24fps to 48fps, we only halve what’s unacceptable. You need the higher frame rate to portray what you’re not looking at, to prevent juddering. Traditionally, we blur the background with soft focus. So why are we raising pixel count when we use out-of-focus to conceal temporal artifacts? It doesn’t make any sense.

Once you start to study the physics, you see a lot of things that don’t make sense. You look through history, and you can’t find any answers; no fundamental reasons to do it. No justification to retain that stuff. If there is no correct frame rate, then why have a frame rate at all? Why not have films that look just like the real world, so you could go see a “movie” instead of a “flick”, or a “judder” [applause].

The correct metric for resolution is dynamic resolution. When the eye moves to pursue a subject, and each image of that subject stays onscreen for a nonzero time, you get blurring. You can calculate this: it’s sin x / x, and it’s massive with even slow motion. If you lock off the camera and something moves, you get blurring for the duration of the shutter time. These concatenate: capture blurring plus eye-tracked display blurring. You can compute how much res you have left in your 4K camera given these losses: you won’t get anywhere near 4K, not even near 1K, in most moving pictures.
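Watkinson’s sin x / x argument can be sketched numerically. In this Python example, the pan speed, shutter fraction, and display hold fraction are illustrative assumptions; a hold fraction of 1.0 stands for a sample-and-hold display. Motion during the shutter is a box blur, eye tracking across a held frame is another box blur, and each has an MTF of |sin x / x|, so the two multiply. The `effective_width` helper is a rough heuristic of our own that treats the first MTF null as a new Nyquist limit.

```python
import math


def step_px(speed_px_per_s, fps):
    """How far a moving object jumps between frames, in pixels."""
    return speed_px_per_s / fps


def box_mtf(freq_cyc_per_px, blur_px):
    """MTF of a uniform (box) blur of length blur_px: |sin x / x| with x = pi * f * L."""
    x = math.pi * freq_cyc_per_px * blur_px
    return 1.0 if x == 0 else abs(math.sin(x) / x)


def combined_mtf(freq_cyc_per_px, speed_px_per_frame, shutter_fraction, hold_fraction):
    """Capture blur (shutter open) cascaded with eye-tracked display blur (frame held)."""
    capture = box_mtf(freq_cyc_per_px, speed_px_per_frame * shutter_fraction)
    display = box_mtf(freq_cyc_per_px, speed_px_per_frame * hold_fraction)
    return capture * display


def effective_width(width_px, blur_px):
    """Rough heuristic: treat the first MTF null (1 / blur_px cycles per pixel)
    as a new Nyquist limit (0.5 cycles per pixel maps to width_px)."""
    if blur_px <= 2:
        return float(width_px)
    return width_px * 2 / blur_px


if __name__ == "__main__":
    speed = 3840 / 5.0  # pan across a 3840-pixel frame in five seconds
    for fps in (24, 48):
        step = step_px(speed, fps)
        print(f"{fps}fps: {step:.0f} px/frame, 180-degree shutter blur {step / 2:.0f} px, "
              f"~{effective_width(3840, step / 2):.0f} px effective")
```

A five-second pan across a 3840-pixel frame moves 32 pixels per frame at 24fps and 16 at 48fps, which is the "only halve" point. With a 180° shutter at 24fps, the capture blur alone is 16 pixels, and `effective_width(3840, 16)` gives 480 pixels, before any display blur is added.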

Don’t get me wrong, I love 4K display, a brilliant idea. But it doesn’t need a 4K signal due to blurring. The compression people laugh at us for making their life easy, we’re blurring the image and taking all the high frequencies out of it, makes compression much easier.

We have compression tech optimized for frame-based media. What if we had an optimal system, and told the compression people to deal with it? MPEG4 already has Continuous Video Objects: moving objects with no frame rate; true object-based vectors with correct motion vectors [not based on finding similar macroblocks, but on actual motion]. These clever bits were eliminated in H.265; we threw away the bits we need for future imaging.

I don’t want to sound pessimistic. We have all these new tools, we should figure out what to do with them!

Q: The problem is that we’re doing massively sub-Nyquist sampling in the temporal domain. It seems that making the frame rate much higher would solve the problem.

A: No, you cannot filter in the temporal domain. You’re correct about the temporal aliases, but it’s not a problem because aliasing is only aliasing if you don’t know what band it belongs in. Moving pictures only work because we sample in the time domain, and the tracking eye applies a baseband filter.