The HPA Tech Retreat is an annual gathering where film and TV industry folks discuss all manner of technical and business issues. I’m taking notes during the sessions and posting them; it’s a very limited transcription of what’s going on, but it should at least give you the flavor of the discussions. This fourth day covered replacing SDI with Ethernet, “better pixels”, virtual post, “too much geek speak”, and more.

I indicate speakers where I can. Any comments of my own are in [square brackets].

Professional Networked Media

Program notes: “Broadcasters and other media professionals are considering moving their live media streams from SDI & AES to Ethernet to enhance flexibility of their plant, to simplify cabling, to tap economies of scale of the enterprise networking industry, and to ease a move to a multi-resolution future that may include UHDTV. Many challenges in this effort remain, but this panel includes people who have done real-world live networked-media projects.”

Moderator: Thomas Edwards, Fox

Al Kovalick, Media Systems Consulting

Jan Eveleens, Axon

Peter Chave, Cisco

Nicholas Pinks, BBC Research & Development

Eric Fankhauser, Evertz

Steven Lampen, Belden

Thomas Edwards: What I really want to do is have people talk about real-world developments, not what’s theoretical. How this will enable revolutionary change.

AK: It won’t be long until SDI is a legacy protocol. Migration patterns: analog to digital (SDI), then traditional IT started moving into traditional AV (Ethernet), now cloud-grade private/public infrastructure. Analog->digital->IT/cloud. Six tech trends. 1) Moore’s Law on chip density, 2x / 2 years. 2) Disk storage capacity growth, 60% / year. 3) Metcalfe’s Law: value of network proportional to # of nodes squared. 4) Everything is virtualized; on-demand resources; scale & agility. 5) Marc Benioff (Salesforce.com): “Software is dead” (dead as an installable, alive as a service). Web apps, SaaS. 6) Ethernet celebrated its 40th anniversary last May. 400 Gb/sec in 2017. ~300 3G-SDI payloads will fit in a 1 Tb/sec link, in each direction.
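As a rough sanity check on those figures, here is a minimal sketch of the arithmetic. The 2.97 Gb/sec nominal 3G-SDI rate is standard; the 10% allowance for packet overhead and headroom is purely my assumption, and the function names are made up for illustration.

```python
SDI_3G_BPS = 2.97e9  # nominal 3G-SDI line rate, bits per second


def streams_per_link(link_bps, stream_bps=SDI_3G_BPS, overhead=0.10):
    """How many whole streams fit in one direction of a link.

    `overhead` is an assumed fraction lost to packet headers and headroom.
    """
    usable_bps = link_bps * (1.0 - overhead)
    return int(usable_bps // stream_bps)


def compound_growth(rate_per_period, periods):
    """Growth factor after compounding, e.g. 0.60 per year for disk capacity."""
    return (1.0 + rate_per_period) ** periods


print(streams_per_link(1e12))              # 3G-SDI streams on a 1 Tb/sec link
print(streams_per_link(400e9))             # ...and on a 400 Gb/sec link
print(round(compound_growth(0.60, 5), 1))  # disk capacity factor after 5 years
print(compound_growth(1.0, 10 / 2))        # chip density factor after 10 years
```

With the assumed overhead, a 1 Tb/sec link carries just over 300 streams, which lines up with the figure quoted in the talk.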

Facility core will become an IT infrastructure. We’ll always have edge devices (lenses, switcher panels, displays) that are AV based, but everything else goes IT.

Packetized AV streaming? Lossless QoS, low delay. Mammoth data rates for HD, QHD, 4K, 8K. Point to multipoint routing, synchronized. Frame switched, too.

Three methods to packet switch AV streams: 1) Source-timed (not as SDI, frame accurate; the sources switch port numbers at a given time, so the sources hand off the “active stream”). 2) Switch-timed (the switch is sent a command to cut during a VBI line, e.g., line 7). 3) Destination-timed: the receiver gets both source streams, does its own switch.
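The destination-timed option is the easiest of the three to picture in code. This is only a sketch: frames are modeled as hypothetical `(frame_number, payload)` tuples, and I am assuming the two streams arrive frame-aligned.

```python
def destination_timed_switch(stream_a, stream_b, switch_frame):
    """Receiver-side cut: take A before switch_frame, B from switch_frame on.

    Each stream is an iterable of (frame_number, payload) tuples. Both are
    assumed to be frame-aligned, which is what lets the receiver cut cleanly.
    """
    for (num_a, data_a), (num_b, data_b) in zip(stream_a, stream_b):
        if num_a != num_b:
            raise ValueError(f"streams not aligned: {num_a} vs {num_b}")
        yield (num_a, data_a) if num_a < switch_frame else (num_b, data_b)


cam1 = [(n, f"cam1-frame{n}") for n in range(6)]
cam2 = [(n, f"cam2-frame{n}") for n in range(6)]
program = list(destination_timed_switch(cam1, cam2, switch_frame=3))
print([payload for _, payload in program])
```

The cost of this approach is that the receiver has to take in both streams around the cut, so it needs twice the bandwidth for that period.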

Path to interoperable all-IT facility: demand open systems, standardize SDI payload over L2/L3, etc.

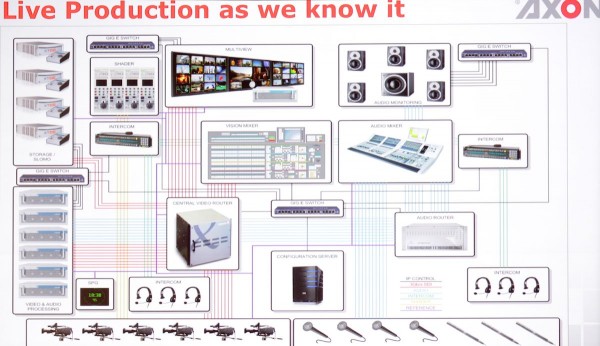

Jan Eveleens, Axon: Ethernet AVB. Production as we know it:

Key challenges: lack of flexibility, difficult to introduce new standards. One cable per signal, and lots of ‘em. Typical example from a recent installation: 157 analog audio DAs, 78 digital audio DAs, 410 analog video DAs, 1018 SDI DAs. Many kilometers of cable.

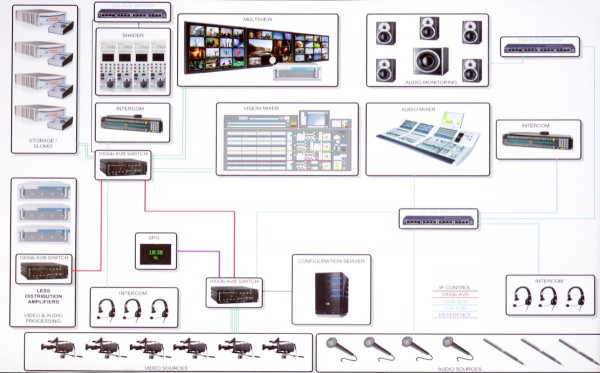

Why Ethernet? The speed is now available, and a 10Gig Ethernet port is already cheaper than an SDI port. 1 terabit link speed expected in next decade. Ethernet allows distributed architectures, bidirectional, multiple signals on the same cable. Here’s what it looks like:

How to make Ethernet work for realtime apps? AVB (Audio Video Bridging). Time synchronization, bandwidth reservation, traffic shaping, configuration management. IEEE 802.1BA, 802.1AS, 802.1Qat, 802.1Qav, etc.

All nodes are fully synchronized. Low latency, 2 msec typical overall. Self-managed bandwidth reservation, multi-cast.
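To make "bandwidth reservation" concrete, here is a toy admission-control sketch. It is not the 802.1Qat protocol itself, just the bookkeeping idea: a stream is admitted only if the link still has reservable capacity. The class name and the 75% reservable share are my assumptions (I believe 75% mirrors a common AVB default, but treat it as a placeholder).

```python
class AvbLink:
    """Toy admission control for one link direction."""

    def __init__(self, capacity_bps, reservable_fraction=0.75):
        self.capacity_bps = capacity_bps
        # Assumed share of the link that streams may reserve; the rest is
        # left for ordinary best-effort traffic.
        self.reservable_bps = capacity_bps * reservable_fraction
        self.reservations = {}  # stream id -> reserved bits per second

    def reserved_bps(self):
        return sum(self.reservations.values())

    def reserve(self, stream_id, bps):
        """Admit the stream only if it fits in the reservable share."""
        if stream_id in self.reservations:
            raise KeyError(f"{stream_id} already reserved")
        if self.reserved_bps() + bps > self.reservable_bps:
            return False
        self.reservations[stream_id] = bps
        return True

    def release(self, stream_id):
        self.reservations.pop(stream_id, None)


link = AvbLink(capacity_bps=10e9)
results = [link.reserve(f"sdi-{i}", 2.97e9) for i in range(3)]
print(results)  # the third 3G-SDI stream does not fit on a 10GigE link
```

Leaving part of the link unreserved is what lets the reserved streams co-exist with existing IP traffic on the same cable.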

IEEE 1722 transport protocol: PCM audio, SDI video with embedded audio, raw video, time-sensitive data.

Interoperability: ensuring AVB nodes talk to other AVB nodes. AVnu Alliance working on it.

How real is it? 100 Mbps – 40 Gbps switches shipping; audio processors, intercoms, consoles and speakers, etc.

Key benefits: open standards, one framework, proven tech, available now, interoperability, plug-and-play, fool-proof (self-managing), perfect co-existence with existing IP traffic. www.avnu.com

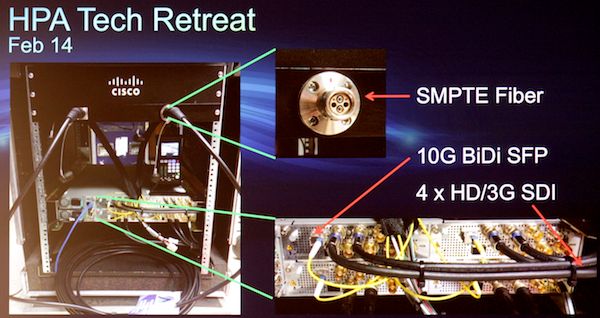

Peter Chave, Cisco: All-IP live production.

In 2002, GigE became a commodity, replaced ASI in many cable-industry applications.

NAB 2013 demo: two cameras converted to GigE, a couple of monitors, IP-based routing and storage, switcher control panel on an iPad. SMPTE 2013: 4Kp60 source as 4x 3G SDI, converted to 2x 10GigE, into Nexus 3K switch to 40G copper link, then converted back again to 4x 3G-SDI. November 2013, same thing with an F55 camera; also an EBU workshop demo, with 4Kp60 signal converted to IP, sent over 40G with extra traffic injected, showed with and without QoS (QoS works!). Here at HPA, 4K on either end, two DCM-G SDI-to-10GigE converters feeding 2x 10Gig fiber (SMPTE fiber camera cable).

VidTrans show later this month: mixing file-based with live data, routed through Layer 2 / Layer 3 VPN.

Nicholas Pinks, BBC: Future of broadcasting. Tony Hall, BBC’s Director General, has defined BBC’s future as IP. BBC on mobile, TV, on-demand, online.

How does IP help? Flexibility, sharing, global assets, future data centers, data models (stop talking about video, start talking about data). The importance of data: more formats, platforms; new types of content, more personalization, better interaction. What we did for Olympics should happen for everything, even local games.

Sources, flows, and grains: A source device, like a camera, creates a video flow, an audio flow, and an event flow (like start/stop markers, camera goes live, person walks into frame, etc.). Within a flow, a “grain”: a timestamp, a source ID, a flow ID, and a payload. Perhaps a single video frame, or could be a single line, or a macroblock. So perhaps my F55 emits a raw picture, an AVC-I flow, an h.264 flow, and an events flow. Not just a concept, we’ve been doing it for two years [Glastonbury Music Festival coverage video clip].
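The grain idea maps naturally onto a small record type. This is a sketch of the concept as described in the talk, not the BBC's actual data format: the field names, the nanosecond timestamp unit, and the helper functions are all my assumptions.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Grain:
    timestamp_ns: int   # capture time; the unit is an assumption
    source_id: str      # e.g. one camera
    flow_id: str        # e.g. that camera's video, audio, or events flow
    payload: bytes      # a frame, a line, a macroblock, an event record...


def group_by_flow(grains):
    """Return {flow_id: [grains sorted by timestamp]}."""
    flows = defaultdict(list)
    for grain in grains:
        flows[grain.flow_id].append(grain)
    return {fid: sorted(gs, key=lambda g: g.timestamp_ns)
            for fid, gs in flows.items()}


def grains_at(flows, timestamp_ns):
    """Pick, from every flow, the grains stamped with the given time."""
    return {fid: [g for g in gs if g.timestamp_ns == timestamp_ns]
            for fid, gs in flows.items()}


grains = [
    Grain(40_000_000, "cam-1", "cam-1/video", b"frame-1"),
    Grain(0, "cam-1", "cam-1/video", b"frame-0"),
    Grain(0, "cam-1", "cam-1/audio", b"audio-0"),
    Grain(40_000_000, "cam-1", "cam-1/events", b"camera goes live"),
]
flows = group_by_flow(grains)
print([g.payload for g in flows["cam-1/video"]])
```

Because every grain carries its own timestamp and IDs, video, audio, and events can travel separately and still be lined up again at the receiving end.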

Stagebox: media streaming device from BBC R&D, converts camera outputs to IP over a wireless link [?].

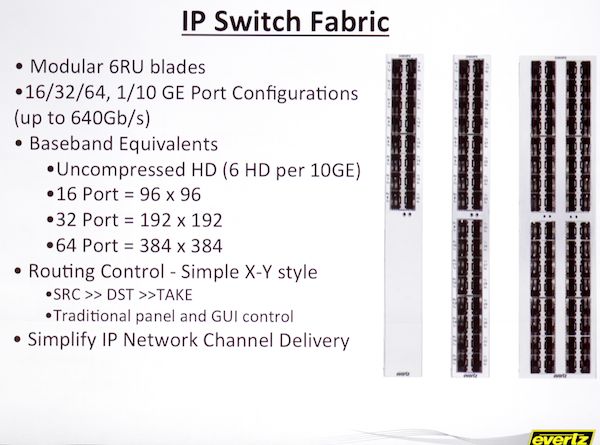

Eric Fankhauser, Evertz: Software Defined Video Networking (SDVN): Ethernet infrastructure, distributed routing, pooled resources, remote resources. Separate control plane from IPX switch fabric. You’re building a private cloud: production, transcoding, playout, etc. become services. Control must be seamless; functionality and reliability must be as good as baseband. Building blocks: IP Gateways, universal edge devices to translate audio/video to IP. Fwd error correction SMPTE 2022, Hitless switching SMPTE 2022-7. IP gateways that plug into video switches, also IP switch fabrics:

EXE-VSR video service router, 46 Tb/sec. 2304 ports of 10GigE, deployed by ESPN.

High-density network address translation, used on edge to extend or link fabrics. Bulk encoders (JPEG2K), 10GE multi-viewers, encoders/transcoders h.264/MPEG-2/J2K, all under unified control.

MAGNUM is the control layer. Complete monitoring and control.

Applications: SDVN for transport, for production, for playout. 10GigE links throughout.

Summary: SDVN future-proof, scalable, high reliability, efficient workflows. Control is key: it must be simple.

Thomas Edwards, Fox: The problem: SDN flow rule updates take 1-10 msec, a huge amount of time, can’t synchronously switch. RP168 timeframe is 10 microsec. Solution: add new flow rule to switch based on new header value, then wait 10 msec. At switch time, source will set header to match the rule at RP168 switch point. After the switch, controller removes the old flow rule. Live demo in demo room on a COTS switch from Arista.
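The sequence described here (install the new rule early, let the sources flip a header value at the switch point, clean up afterwards) can be simulated in a few lines. This is a toy model with hypothetical names, not the demo's code. In particular, I am assuming the outgoing source also changes its header so that it stops matching; the notes only say the source sets its header to match the new rule.

```python
class FlowTable:
    """Forwarding rules keyed on (source, header tag)."""

    def __init__(self):
        self.rules = set()

    def add(self, source, tag):
        self.rules.add((source, tag))

    def remove(self, source, tag):
        self.rules.discard((source, tag))

    def forwards(self, source, tag):
        return (source, tag) in self.rules


def simulate(total_frames=8, prepare_frame=2, switch_frame=5, cleanup_frame=7):
    table = FlowTable()
    table.add("A", 1)              # A is on air with tag 1
    tags = {"A": 1, "B": 0}        # B is sending, but no rule matches tag 0
    on_air = []
    for frame in range(total_frames):
        if frame == prepare_frame:
            table.add("B", 2)      # slow rule update, done well ahead of time
        if frame == switch_frame:
            tags["A"], tags["B"] = 0, 2   # sources retag at the switch point
        if frame == cleanup_frame:
            table.remove("A", 1)   # old rule is no longer matched; drop it
        on_air.append([s for s in ("A", "B") if table.forwards(s, tags[s])])
    return on_air


print(simulate())
```

The point of the design is that the slow step (the rule update) happens when timing does not matter, and the frame-accurate step is done by the sources, which can time it precisely.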

Steve Lampen, Belden: 4K single link: is coax dead? No standards for 4K single link. Everything here is theoretical. There is no 12G single link (yet; we’re close…)

We normally test to 3rd harmonic. But we can’t get an 18 GHz tester, so we’ll test to the 12 GHz clock. SMPTE 1993 formula: safe distance is until -20dB at 1/2 clock (Nyquist). Things have improved since then; -40dB at 1/2 clock. Our existing cable works to 6 GHz, our connectors, too. 12GHz requires new designs: add 20 years to improvements.

Various current coax cables at 12 GHz run from 73 – 347 feet at -40dB @ 1/2 clock. Not good enough? You can go to fiber (but fiber isn’t easy). 227 feet for a very common cable (1694, I think).
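The safe-distance rule is simple arithmetic once you have a cable's attenuation at half the clock frequency. Here is a sketch that backs the attenuation out of the 227-foot figure above and compares the older -20dB rule with the newer -40dB one. It assumes loss in dB scales linearly with length; the function names are mine, and no real datasheet values are used.

```python
def max_run_ft(loss_budget_db, atten_db_per_100ft):
    """Longest run that stays within the loss budget at half the clock rate."""
    return 100.0 * loss_budget_db / atten_db_per_100ft


def implied_atten_db_per_100ft(run_ft, loss_budget_db):
    """Back out the attenuation that a quoted run length implies."""
    return 100.0 * loss_budget_db / run_ft


# 227 ft at -40dB implies this much loss per 100 ft at 6 GHz (half of 12 GHz).
atten = implied_atten_db_per_100ft(run_ft=227, loss_budget_db=40)
old_rule_ft = max_run_ft(20, atten)   # 1993-style -20dB budget
new_rule_ft = max_run_ft(40, atten)   # current -40dB budget
print(round(atten, 1), round(old_rule_ft, 1), round(new_rule_ft, 1))
```

Doubling the loss budget in dB doubles the usable run, which is why the move from -20dB to -40dB matters so much at these frequencies.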

Better Pixels: Best Bang for the Buck?

Program notes: “Like stereoscopic 3D before it, so-called “4K” spatial resolution has been a hot topic at professional and consumer trade shows. Higher frame rates have also been widely discussed. But is it possible that “better pixels” (higher dynamic range and color volume) might be better than either more spatial pixels or faster pixels? Or might some forms of “better pixels” actually cause problems on formerly low-brightness cinema screens?”

Moderator: Pat Griffis, Dolby

Higher Resolution, Higher Frame Rate, and Better Pixels in Context – Mark Schubin

Overview of Real-World Brightness – Pat Griffis, Dolby

Color Volume and Quantization Errors – Robin Atkins, Dolby

Cinema Motion Effects Based on Brightness and Dynamic Range – Matt Cowan, ETC

Image Dynamic Range in TV Applications – Masayuki Sugawara, NHK Research Labs

Better Pixels in VFX and Cinematography – Bill Taylor ASC, VES

Mark Schubin: Single-camera 4K: only have to worry about lens MTF and storage speed/capacity. Can reframe, reposition, stabilize, easier filtering. Earlier cameras: DoD, Lockheed-Martin 3-CCD (12 Mpixel each), Dalsa, RED. 2013 top box-office: mostly ARRI Alexa, 2880×1620, not even 3K. Live theater transmission of 4K… in UK. EBU shows that 4K’s difference is perceivable on 56” screen at Lechner distance. They also found that doubling frame rate is more visible. HDR? Can increase detail: boosts contrast, makes it look sharper. Has huge impact. What data rate increase for HDR? To avoid contouring (visible quantization), need more bits. At SMPTE, we asked, do we want more or faster or better pixels? Answer: faster pixels and better pixels are more desirable than more pixels.

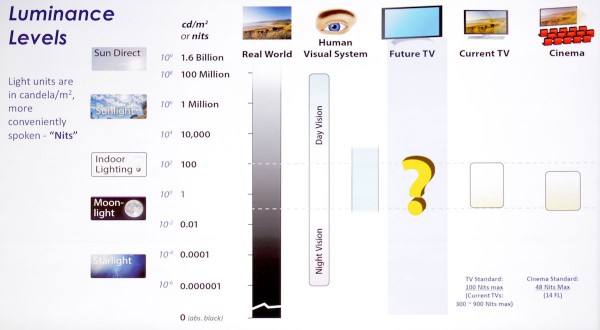

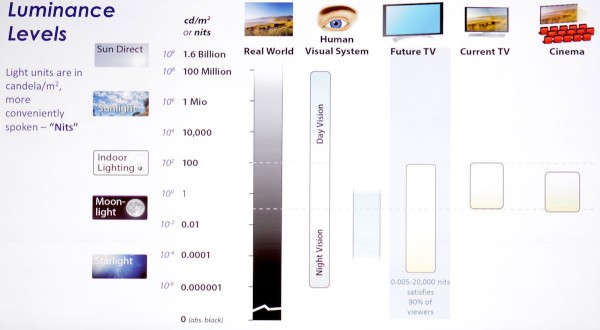

Pat Griffis: Next-gen imaging is a function of more, faster, better pixels (4K+, HFR, HDR and expanded color). Range of brightness we deal with in real world: TV standard 100 nits (1 nit = 1 candela / square meter, roughly 3.4 nits = 1 foot-lambert) (current TVs may get to 300-900 nits), sunlight 1.6 billion nits, etc. Adaptation gives 5-6 orders of magnitude. What do we want for new TVs?
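For anyone juggling these units, here is a small sketch of the conversions. The foot-lambert factor follows from its definition (1/π candela per square foot); the function names are mine.

```python
import math

SQUARE_FOOT_IN_M2 = 0.3048 ** 2
NITS_PER_FOOT_LAMBERT = 1.0 / (math.pi * SQUARE_FOOT_IN_M2)  # about 3.43


def foot_lamberts_to_nits(fl):
    return fl * NITS_PER_FOOT_LAMBERT


def orders_of_magnitude(brighter_nits, dimmer_nits):
    """Base-10 log of a luminance ratio."""
    return math.log10(brighter_nits / dimmer_nits)


print(round(foot_lamberts_to_nits(16), 1))            # a 16 ft-lambert screen, in nits
print(round(orders_of_magnitude(1.6e9, 100), 1))      # sunlight vs. a 100-nit TV
```

The second figure shows the scale of the gap: direct sunlight sits about seven orders of magnitude above the 100-nit TV reference.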

We did an experiment at Dolby, focusing a cine projector down to a 21” display, and let users adjust the brightness, to see what sort of brightness level people like on displays.

Typical brightness levels in the real world:

We found we wanted a TV with more range:

Cameras today can capture a wide DR, wide color, but these get squeezed down in post, mastering, and distribution.

We find that human prefs are for 200X brightness, 4000x contrast compared to current displays. Tech limits of film, CRT, LCD are not relevant any more. Best balance of more, faster, and better is the ideal.

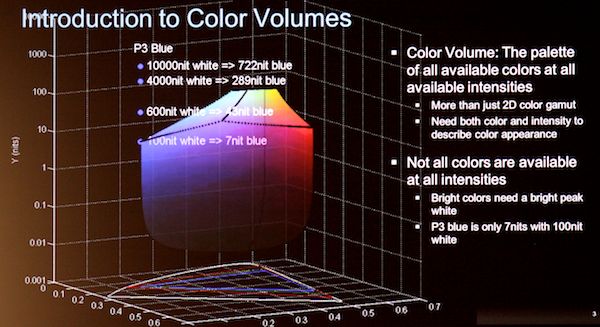

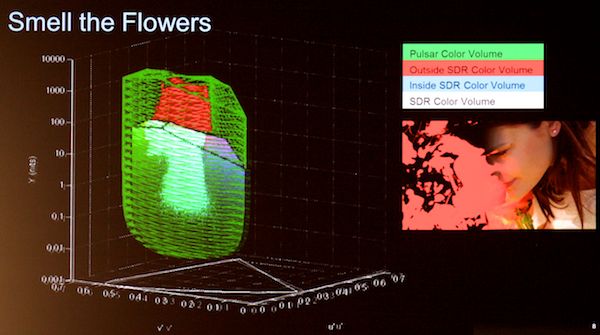

Robin Atkins: What’s a color volume? Normally, the CIE chromaticity chart. We’re used to drawing triangles for Rec.709, P3, etc. but those also have associated brightnesses:

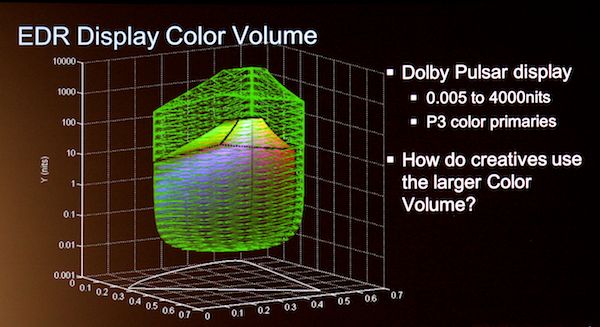

We can superimpose an extended dynamic range (EDR); here’s what the Dolby Pulsar dynamic range is:

We gave this to colorists; they use the EDR mostly for highlights (sky, sun, saturated colors, skies):

[one frame of an animation, showing the red/black color map sweeping across the image on the right, with red showing pixels that exploit EDR.]

If the Pulsar EDR were even larger, colorists would have used it. Some elements still clip against the headroom, as colorists pushed for more.

Summary: instead of gamut, think about volume (including brightness). Bright colors need a bright white. Colorists will exploit the greater volume.

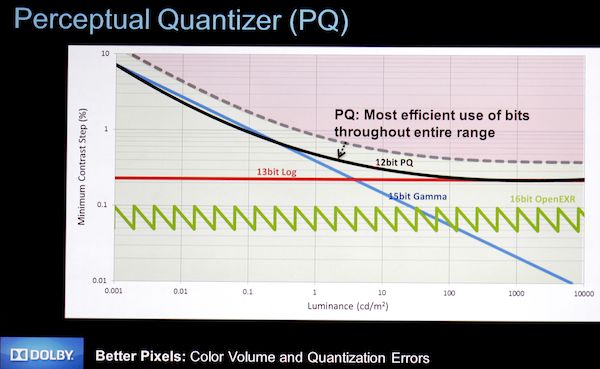

For a given color volume, what’s the required bit depth? Barten Ramp, ITU Report BT.2246 found a consensus threshold for visible contouring. OpenEXR format is well below this threshold for entire range up through 19K nits. With a simple gamma curve (BT.1886), need 15 bits to stay under threshold, 13 bits for log; Perceptual Quantizer (PQ, Dolby’s own transfer curve) needs only 12 bits:

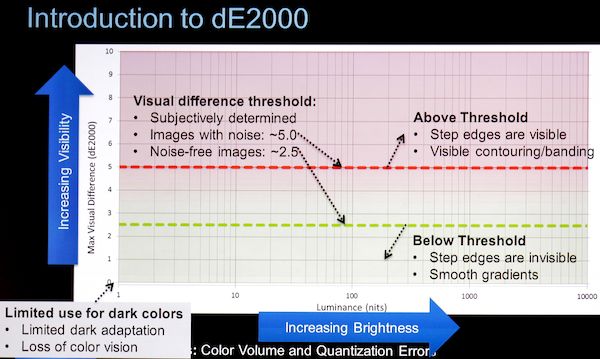

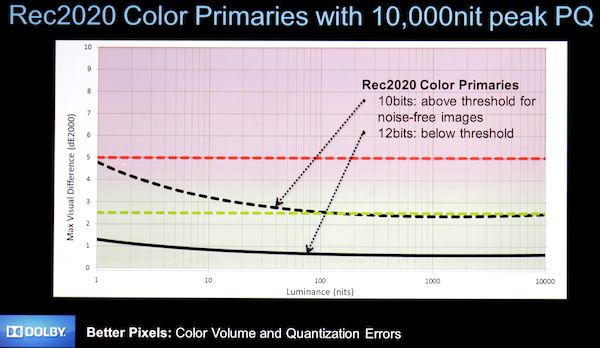

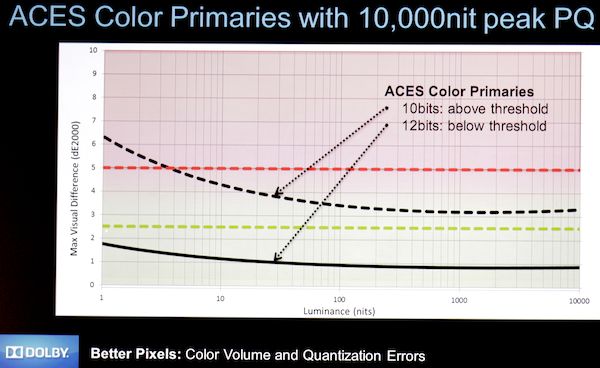

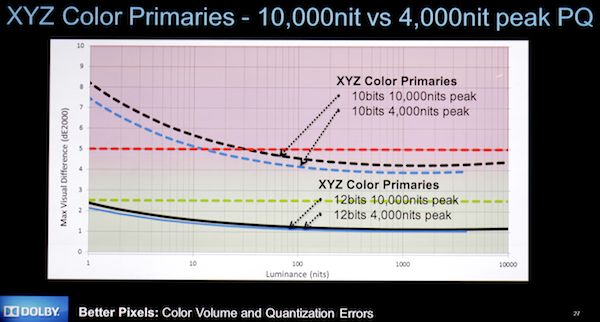

That’s for monochrome. For color, use a color-perturbation test, dE2000 metric:

What happens with PQ coding?

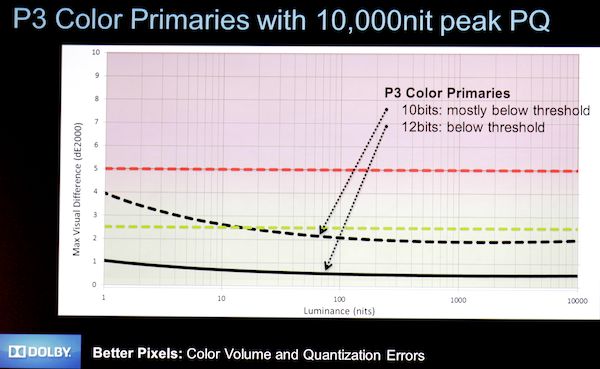

So PQ is the most efficient way to encode EDR. Color primaries affect the required bits: 10 bits for P3, Rec.2020 needs 12, also 12 for ACES and XYZ. Peak luminance has little impact. MovieLabs proposal for 10,000 nit peak, XYZ color, 12-bit PQ format.
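To get a feel for why PQ is so economical with bits, here is a sketch of the curve and the luminance step between adjacent code values. The constants are the ones later published in SMPTE ST 2084; I am assuming that is the same PQ curve discussed in this session. The helper names and the full-range quantization are mine.

```python
M1 = 2610 / 16384
M2 = 2523 / 4096 * 128
C1 = 3424 / 4096
C2 = 2413 / 4096 * 32
C3 = 2392 / 4096 * 32
PEAK_NITS = 10000.0


def pq_encode(nits):
    """Luminance in nits (0..10000) to a normalized PQ signal (0..1)."""
    y = max(nits, 0.0) / PEAK_NITS
    ym = y ** M1
    return ((C1 + C2 * ym) / (1.0 + C3 * ym)) ** M2


def pq_decode(signal):
    """Normalized PQ signal (0..1) back to luminance in nits."""
    p = signal ** (1.0 / M2)
    y = (max(p - C1, 0.0) / (C2 - C3 * p)) ** (1.0 / M1)
    return y * PEAK_NITS


def quantize(signal, bits):
    """Round a 0..1 signal to the nearest full-range integer code."""
    return round(signal * (2 ** bits - 1))


def step_percent(nits, bits=12):
    """Luminance jump, in percent, between adjacent codes near `nits`.

    Only meaningful for nits well above 0 and below the 10,000-nit peak.
    """
    top = 2 ** bits - 1
    code = quantize(pq_encode(nits), bits)
    lo, hi = pq_decode(code / top), pq_decode((code + 1) / top)
    return 100.0 * (hi - lo) / lo


for level in (1, 100, 1000):
    print(level, round(step_percent(level, bits=12), 2),
          round(step_percent(level, bits=10), 2))
```

Comparing the 12-bit and 10-bit columns shows how much coarser each step gets when two bits are dropped, which is the trade-off behind the bit-depth numbers above.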

Matt Cowan: Motion and brightness. Our 16 ft-lambert spec (open-gate cine projector) came from limiting flicker visibility. Better brightness increases creative palette. [reference throughout: SMPTE Conference 2012, Andrew Watson HFR session].

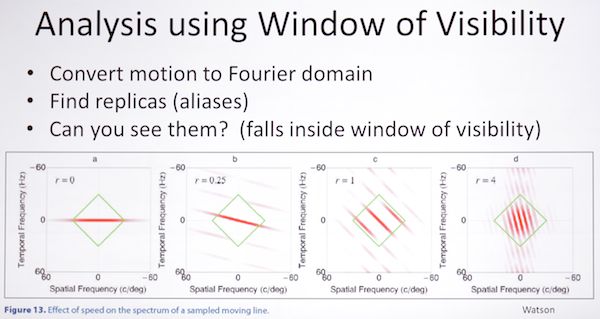

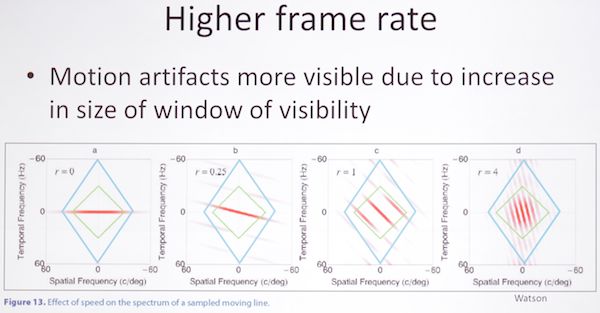

Issues: judder (images in “wrong” spot, as with double-flash projection), flicker. Watson talks about the Window of Visibility, model of visibility for motion artifacts. When you transform image into Fourier domain:

The diamond is the window of visibility; we only want to see one trace. The result: spatial acuity increases with increasing brightness, as does contrast sensitivity. Temporal acuity also increases with brightness.

What can we do? slow down motion, reduce average brightness, increase motion blur, reduce contrast, or… increase frame rate.

Masayuki Sugawara: Everyone talks about HDR, but disagreement on exact definition. Current problems: loss of detail in highlights, loss of saturation in highlights, hard to represent bright and dark areas, banding in 8-bit delivery.

Three layers of DR: device performance (DP), system standards (SS), tone mapping (OP, operational practice). Proposed requirements: significant improvement in user visual experience, reasonable cost, acceptable changes in current practice, satisfactory compatibility with current practices. Combination of increased brightness and increased headroom seems to be best approach. Major changes on operational practice, end-user’s practice. Can HDR master be automatically tone-mapped for current/legacy displays? How do we adjust for wow-factor vs burden on eyes (brightness-caused discomfort)? How much headroom, how to implement?

Deeper bit depth: small change; Rec.2020 already 12 bit. More efficient quantization: large change from current practice; not desirable. Improved tone mapping: extend scene DR. Increase display max brightness as well? Use still-image HDR tone mapping approaches? Not proven; differences.

Conclusion: improvements needed; increase in headroom is major solution. To what extent; how to implement? What do you think about improved tone mapping as alternative approach?

Bill Taylor: DP and VFX supervisor; I work to make pictures easy and pleasant to watch. DP’s job is to overcome the limits of the display. Our job is to manage contrast in scenes. Three-stop brightness ratio (8:1) key/fill is subjectively unpleasant, so we fill with lights or bounce cards. Similarly, saturated colors may be beyond the reach of our tech; we test wardrobe and makeup to make sure it’s photographable. These new displays make it so we don’t have to work so hard to make something that’s comfortable to see.

Trumbull made Showscan, 60fps; strobing disappeared. Made DP’s life easier, we could see for the first time cross-screen motion just like reality. Now, 30+ years later, we’re just beginning to get this in the theater.

So the new tech is allowing much more freedom, in lighting, color, and motion. Tremendously exciting; unlimited expressive potential. Anyone can make a scene go wow!, but you can’t have two hours of wow!. A 2-person living-room drama doesn’t need HDR, 4K, HFR, but it’s horses for courses: imagine Lawrence of Arabia in HDR exhibition.

Pat Griffis: Rec.2020 still based on gamma. Gamma vs. PQ?

MS: Current gamma function is only for data compression, so alternates such as lossless coding can be used. But for TV, signals are coded. Brightness corresponds to WFM in current practice, very beneficial.

Pat: gamma vs PQ, no point in putting bits where we can’t see them. CRTs were gamma devices, but new displays aren’t. Digital Cine chose gamma of 2.6, but it didn’t embrace high brightness. It’s up to the standards bodies.

Q from Charles Poynton: Isn’t gamma a perceptual quantizer?

Thomas: as a perceptual quantizer it falls apart at high brightness.

???: Both log and gamma are subsets of PQ.

Mark: You can see 4K, but more bang for the buck in HDR and HFR. If I could influence the consumer electronics industry, I would!

Thomas: The panel makers are making 4K glass, so we’ll get it “for free”. We’ll have 8 million anemic pixels. It’s the balance between cost.

Bill: In terms of commercially acceptable HDR, color neg has enormous color depth. You can get higher res out of existing negs, and much greater DR than we’ve ever seen. Dig out the Lawrence of Arabia camera negs, make an HDR transfer.

Thomas: Film loses contrast sensitivity before it loses dynamic range, so it’s unclear if we can recover that.

John Sprung: remember early zoom-lens films with constant zooming, early stereo records with ping-ponging sound? Let’s not overuse this!

Virtual/Distributed Post

Program notes: “More powerful computing, cheaper storage and other technologies have enabled a range of on-location services once relegated to the post production facility. In this panel, we examine how to define distributed or virtual post, what its current limits are, and what technologies need to advance to improve its reach. The panel will also examine how other new trends in the industry (4K, HFR, ACES) impact virtual post and whether the brick-and-mortar post house will become obsolete.”

Moderator: Debra Kaufman, Creative Cow

Jennifer Goldstein Barnes, DAX

David Peto, Aframe

Thomas True, NVIDIA

Guillaume Aubuchon, DigitalFilm Tree

Rich Welsh, Sundog Media Toolkit

Bill Feightner, Colorfront

DK: Not using the C-word, or as Josh puts it, “clown”. Virtual or distributed post can mean so many things…

BF, Colorfront: Virtual post, how are we involved? Started in 2000, provided all SW-based color correction, 2nd product run developed the de facto on-set dailies system. Grown into virtual-based platform that can run anywhere for transcoding, dailies, remote color correction.

DP, Aframe: A powerful cloud platform for collecting / distributing television footage. 5 years old, based in UK, we own / operate our own cloud.

TT, Nvidia: We make GPUs. Workstations vs SAN storage, we looked at moving application to where the data was, thus moving the GPU to the server farm, too.

JB, DAX: we’re in Culver City, the DAX platform is about getting material expediently to all stakeholders in a timely fashion.

RW, Sundog: SW company providing workflow platform, it’s virtual, bridges between clouds. We work with vendors on a job-by-job basis.

GA, DigitalFilm Tree: using hybrid virtual tech to help deliver TV and feature films.

DK: What is virtual post?

Bill: We’ve been in a serial workflow pattern, but now we’re in a parallel model. Leon posed the question, is post dead? No, but it’s changing rapidly into this parallel fashion. Post tasks are moving up into production or even pre-pro. Haven’t come up with a name for this yet; not pre/prod/post any more. Working as workgroups, the bulk of the work done concurrently.

DK: So we won’t call it post?

BF: It won’t be post. It’s still important but it’s moving upstream. It may redefine itself. Still have management of the job, that’s a strong role.

[Sometimes lost track of who is who, so you’ll see “??” for the speaker. Sorry! – AJW]

??: Customers are driving it. Distributed workflow is a response to that, to keep staff productive. We have to bring our own industry into this new world, especially as budgets shrink. Making better use of our teams.

GA: Pushing the services we’ve always offered to the customer. Moving the barriers. We do some TV shows where we deliver the goods to the customer’s site. We distribute all the computation and all those files to wherever they need to be.

JB: Need to get people working with the material as quickly as you can, remove the obstacles to getting material moved along. Have to move it into a collective space that people are comfortable with. How do we get to a place where we can all work together, where all the tools work together.

RW (?): We didn’t suddenly call it render farm post or SAN post when we moved stuff before. It doesn’t matter where the bits are as long as you can get it back, you don’t care where the processes take place as long as it’s safe and you know where it is. It’s not that different from working in a facility, but I never say that to customers as it scares the sh_t out of them.

DP: “Distributed” is the wrong word as it’s bringing people together. Fast turnaround / upgrading: you can get customers using stuff, and if there’s a problem you can upgrade right away.

Bill: People who are in these workgroups, we’re moving from a hardware-based to a service-based system. The differentiation comes back to the creative side, not what hardware you have. We provide tech to enable that to happen. It’s a different reincarnation, much more powerful and efficient. Iteration is much faster in a parallel fashion. Is software dead? Yes and no; sometimes SaaS works. On the other hand, we’ll have thicker clients, doing more locally, when bandwidth is lower.

GA: Sounds optimistic. When you engage a production in virtualized workflow, the selling point isn’t the utopian future, it’s budget line items. Things like having scalability, being able to have files anywhere. The truth is other industries have moved faster. You have to talk to the individual producer, not the studio.

BF: We’re not disagreeing, we’re not gonna see the transition just yet, but good seeds are being planted.

GA: Right now delivering virtual color correction, titling, conform over cloud, open stack, open storage. The way we deliver that storage is lots of storage replication, geo-co-location. The key is to show ‘em how it’ll save ‘em money. There’s a bigger argument for centralization of data and the monetization, but now it’s getting at the showrunner, and addressing ‘em with cloud services.

Rich: My example is from distribution. When you get to localization and versioning, you get one show with 200 versions, then another one with only 150 versions… it’s not economically viable to spend CapEx to address those peaks. The value is to overflow that capacity into the cloud.

DP: Show “World’s Toughest Truckers”, 12 cameras per truck, all shot then uploaded, over 150 people working on the footage (Discovery Channel, Channel 5), then edited on Avid (with metadata), uploaded for approvals, done this for three years. It’s live, it works, it’s exciting. It’s hard to get as a concept, but when a team starts using it, you can’t get it away from them. Spreads by word of mouth.

Jennifer: Word of mouth is a lot. Lightbulbs go off when people see how easy it is to use. No one cares about the hardware as long as they can get their jobs done. The job for this connective tissue is to be simple, to work with all the other vendors.

Bill: All these different choices can be overpowering. “Just plug it together and it works” is the goal, but there are so many ways it can go wrong. It should just plug together. Images should come out of cameras and plug into this environment and it should just work. So many choices, add virtual to it and it’ll just fall on its face.

Question: How are you doing virtual online at DFT?

GA: What we did, we use OpenStack, open cloud. Swift storage running at DFT and at the shows, replicating. Resolve and Avid, Avid in a virtual environment on OpenStack. The key thing about Swift, replicating for speed. Use HTTP GET request, it’ll be fetched from wherever it exists. We were pursuing bandwidth for a long time. With OpenStack + custom software, not everything needs to happen immediately. An automated process handles intelligent replication and caching. Replicating different material at different times to work with lower bandwidth connections. People have worked 2000 miles from the server, using local copies and SSD caches.
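The "fetch it from wherever it exists, keep a local copy" idea can be sketched without any real storage system. To be clear, this is not the Swift API or DFT's software: the classes, site names, and latencies below are hypothetical stand-ins to show the read path.

```python
class Site:
    """One storage location holding some objects (name -> bytes)."""

    def __init__(self, name, latency_ms, objects=None):
        self.name = name
        self.latency_ms = latency_ms
        self.objects = dict(objects or {})


class CachingClient:
    """Reads from a local cache first, then from the nearest site that has it."""

    def __init__(self, sites):
        self.sites = sorted(sites, key=lambda s: s.latency_ms)
        self.cache = {}       # stands in for a local SSD cache
        self.fetch_log = []   # (object name, where it came from)

    def get(self, name):
        if name in self.cache:
            self.fetch_log.append((name, "cache"))
            return self.cache[name]
        for site in self.sites:            # nearest replica first
            if name in site.objects:
                self.cache[name] = site.objects[name]
                self.fetch_log.append((name, site.name))
                return self.cache[name]
        raise KeyError(name)


facility = Site("facility", latency_ms=60,
                objects={"shot-001.raw": b"RAW", "shot-001.proxy": b"PROXY"})
on_set = Site("set", latency_ms=2, objects={"shot-001.proxy": b"PROXY"})
client = CachingClient([facility, on_set])

client.get("shot-001.proxy")   # already replicated to the nearby site
client.get("shot-001.raw")     # only at the facility, so fetched from there
client.get("shot-001.raw")     # second read is served locally
print(client.fetch_log)
```

Deciding which material gets replicated to the nearby site ahead of time is where the "intelligent replication" mentioned above would come in; the sketch leaves that part out.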

Q: Security? MPAA audits, studio data centers, unskilled workers accessing storage…

GA: We bought into OpenStack’s Keystone authentication layer. Far more secure than things were before, because we know everyone accessing material (not possible with physical media shipping around). I can tell you right now who’s touching a file. The idea of federated identity, the studio can know who everyone is.

??: Working in this way you can have much more granular access rights. Dynamic watermarking. Security is tougher than in a physical environment. But it’s still new, what we need is a proper industry security framework, so we don’t keep inventing different things.

GA: A lot of these issues revolve around community. Federated identity, open auth layer that’s outside the internal app.

David: We do “Big Brother”, some of which is legally very sensitive. I was a producer for years, did stuff for Apple (imagine the security!). I want the provider to have total control, not farm it out. If we lost one gig of data or had it stolen, that’s the end of my business; that’s how seriously we take it.

DK: Is it just because hackers haven’t found us yet? Will all bets be off?

David: It’s the way the media is stored as IDs, so there’s nothing there; it’s striped over hundreds of drives; very hard to steal.

GA: Open source (OpenStack, CloudStack) is more secure because so many are using cloud that everyone has an interest in keeping it secure.

Bill: Monitoring: if there’s illicit activity, take care of it.

Thomas: We want to deliver a virtual desktop the same as the local desktop. We run the same app on the server, then use the GPU to send the desktop as an encrypted H.264 feed to the client. You’re basically looking at a movie of your application (Nvidia Grid). A different approach.

DK: What’s the next tech step?

GA: All replication will end: shoot it once, put it into the cloud, should be one singular arc. Just virtual manipulation of that file.

Jennifer: Some will need to work in sandboxes.

Bill: The essence of workflow planning is knowing which files and versions to preserve and sandbox; you’ll be storing several layers of the project.

GA: All the versioning happens, but only the metadata is stored.

Jennifer: We don’t have that yet, so it’s conjecture. I think you’re right, but we won’t know until we’ve done it. A lot of it is getting client feedback, getting experience. We will learn what we need to hold onto, what’s most efficient.

David: Hundreds of productions. We learned we didn’t have all the Avid keyboard shortcuts virtualized! Should we have a timeline in our app? “No, because then a producer will start editing.” “Yes, so we can see what we’ve got”.

Thomas: This is post, so as soon as you give ‘em a tool you get feedback. The biggest thing was that we needed to enable Wacom tablets, we needed to virtualize USB I/O. Tech challenges, 10- and 12-bit scan out of GPU, virtualized, encoded, then decoded on the client. Being able to adjust the color balance on the client.

Rich: Biggest problem isn’t scaling or adding people to a job, but getting a money person to sign off on it. Building systems to make that sign-off easier.

Q: What’s the biggest barrier to entry; why are people not doing it right now?

Rich: We can’t go fast enough, they are beating down the doors. The biggest issue is the comfort factor.

GA: We’re only 5 years from film workflow. It’s the pace of change; they’re just barely happy with file-based workflow.

Bill: This is disruptive, not just incremental. Throwing away concepts of limits, rethinking workflows and organization, restructuring around what’s possible technically.

??: Everyone is hugely busy, so getting their attention is hard. Making a change that could risk delivery of your next job is a big leap when you have something that works. Avid had a tipping point 1993/1994 as Leon said. Are we there yet with virtual? Probably not yet.

DK: The tipping point happens when some high profile show does it and it works.

Q: Usability of virtual desktops today, for things like sound syncing, frame accuracy?

Thomas: It’s just as if you’re sitting at your own desktop. A GigE connection should be fine.

Bill: Interactivity across distances: how do we give that immediate experience locally? It’s how you distribute that processing. Locally, thicker clients can be used for critical stuff, like painting on a file, or doing color correction. Combination of caching, negotiating a connection. Making software that adapts to the different latency needs.

Rich: The biggest bugbear is buying equipment they’ll only use one month. There’s a lot of advantage to thin-client architecture even if it’s on-site.

Q: Uncompressed video or other high-bitrate stuff: what’s your preferred method to get it into the cloud?

GA: We run two private clouds. Ingest is to local cloud. It takes time. Then we replicate to public; we have partnership with RackSpace for public cloud. We get a drive from a DIT and plug it into our cloud.

David: 10GigE pipes, UDP acceleration built in, you’ll get 90-95% of your bandwidth. Robust pause/resume if the connection drops. Where bandwidth is constrained, we revert to proxy. You don’t always need the full-res.
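For a sense of scale, here is a back-of-the-envelope sketch of upload times at those efficiencies. The payload sizes are examples I picked, not numbers from the panel.

```python
def transfer_hours(size_bytes, link_bps=10e9, efficiency=0.90):
    """Hours to move size_bytes over a link at the given usable fraction."""
    return size_bytes * 8 / (link_bps * efficiency) / 3600.0


ONE_TB = 1e12  # decimal terabyte, in bytes

for efficiency in (0.90, 0.95):
    minutes = transfer_hours(ONE_TB, efficiency=efficiency) * 60
    print(f"1 TB at {efficiency:.0%} of 10GigE: {minutes:.1f} minutes")

print(f"100 TB at 90%: {transfer_hours(100 * ONE_TB):.1f} hours")
```

At these rates a terabyte is a coffee break, but a 100 TB show is still about a day of sustained transfer, which is where falling back to proxies starts to make sense.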

Q: Workflow cost efficiency: no one will pay for data management, storage. Petabytes of original files; cost for loading and archiving?

GA: 100 TB for $5000. We just increase the size of the Swift cluster as needed. By use of Swift we’ve reduced client’s costs compared to SANs, big NAS boxes. Better product for lower cost.

David: Who makes money from offline? Nobody. The grade, the audio, the online, the VFX make money. From real productions: we’ve saved up to one week in seven due to reductions in required travel, 40% of editing time (logging, etc), 40% of storage cost, 80% on delivery costs. Moving physical media from one building to another building is completely pointless.

Rich: It’s a misconception that what we’re doing will eliminate post houses: you still want a facility. You’re changing how you use that space, using it for creativity instead of equipment. It changes the nature of the post house.

GA: I agree: we’re becoming a service provider.

David: Can’t replace having two people in a room cutting a show.

GA: That’s why you have to deliver services to the client’s edit suite.

Bill: Can’t replace having bricks ‘n’ mortar place with a big screen and a collaborative space. But if the client is in London, wouldn’t it be nice to have a facility-sharing coalition tied together with this tech, so you can remotely collaborate?

David: Producers don’t want to give up the ability to be “in the edit”, which means having a runner bring them sushi.

Q: Taking live material into your processes?

David: We know how to do it, just waiting for a customer. And a camera vendor is working on enabling technology.

GA: Most of the folks we work with aren’t opposed to that workflow.

Q: What type of indemnification are your clients requiring for their security?

David: No one is asking yet, because we never look at your content. And anything that could be a problem is reversible, like file deletion. We will give you the tools, but you use your own protocols to ensure security.

GA: We also use soft delete so nothing really vanishes.
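[For anyone unfamiliar with the term, here is a minimal sketch of soft delete: a delete only marks the item, so it can be restored later. The class and method names are hypothetical; this is a toy in-memory store, not how GA's Swift-based system actually does it.]

```python
import time


class SoftDeleteStore:
    """Toy in-memory store where delete() hides an item instead of erasing it."""

    def __init__(self):
        self._items = {}       # name -> content
        self._deleted_at = {}  # name -> time the item was soft-deleted

    def put(self, name, content):
        self._items[name] = content
        self._deleted_at.pop(name, None)  # re-uploading clears any delete mark

    def delete(self, name):
        if name not in self._items:
            raise KeyError(name)
        self._deleted_at[name] = time.time()  # mark only; content is kept

    def restore(self, name):
        if name not in self._deleted_at:
            raise KeyError(name)
        del self._deleted_at[name]

    def get(self, name):
        if name not in self._items or name in self._deleted_at:
            raise KeyError(name)
        return self._items[name]

    def listing(self):
        """Names visible to the user, i.e. everything not soft-deleted."""
        return sorted(n for n in self._items if n not in self._deleted_at)
```

[A deleted clip drops out of `listing()` and `get()` but comes back intact after `restore()`, which is the "reversible" property David mentions.]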

Will post go away? No, it’ll change to a services and consulting business. Being flexible. From “I’m in the Flame suite” to “I’m with the best colorist in the world”.

Breaking the Model

Program notes: “Technology is at the heart of explosions of creativity in every aspect of the entertainment industry. Even words like film, television, post-production, production, archiving are being re-defined. This positive disruptive force challenges all of us to be clever, agile, and constant problem solvers. This panel gathers experts in animation, games, archiving, and advanced technology to demonstrate and discuss this transformative time.”

Moderator: Kari Grubin, NSS Labs

Cynthia Slavens, Pixar Animation Studios

Wendy Aylsworth, Warner Bros. and SMPTE

Andrea Kalas, Paramount Pictures

Karon Weber, Xbox

KG: What are the cybersecurity considerations in this new world? Think about the Target attack. The people making those attacks are getting smarter; they got in through the HVAC vendor, using a phishing attack. It didn’t matter where they got in, they had malware that went looking for point-of-sale info. I used to own a post house; I’d get woken up by the HVAC company saying the machine room is too hot, so I could have been infiltrated the same way. DDoS attacks: what if you’re a digital asset portal? Content creation is global; the threat landscape changes based on location. Just be aware of that. Post teams and IT teams need to learn how to work together.

CS: I head up the mastering group at Pixar. Also have a hand in calibrating displays, in-progress DCPs, color correction, processing, deliverables. Exciting time in our processes: “what is still post?” There’s very little that’s “post” in our everyday lives. It all speeds up, the window shrinks, everyone wants it all faster. What’s available to us now: HFR, HDR, etc. We want to affect those changes upstream. If a new tech is available it has to make sense creatively. HDR is definitely one of those things we’re interested in. Lighting dept is interested, but it works farther upstream, into art dept. When your production cycle is 4 years, new tech becomes legacy tech really quickly! 2009’s “Up”, in 3D: for the director it was important to augment the story. Months of testing on Toy Story 3 in IMAX. We have 14 movies we can test with, we have sequences that are “encoder busters”, for example.

WA: Three points: dichotomy between content mobility and quality; need for clear approach for the consumer if we go to a new format; being smart distributing to the home. Mobility vs quality: we get a plethora of mobile formats, yet we’re being pushed to HFR, HDR, 4K, immersive audio, 3D, etc., with file sizes 12x the size of HD files. IMF is out now, Warner Bros uses IMF extensively. New SMPTE task force: “File formats and media interoperability”. I’m not sure that all these formats are actually helping. We need to learn how to lop off the old formats and move forward to new, better formats (I’m all for dropping fractional frame rates [applause]). UV has its own file format. Next-gen quality, have to experiment to see what works, what consumers will accept, but you have to make a big jump to avoid confusion. Need to move up in res, frame rate, etc all at once. On the distribution side, needs to be driven by consumer. Blu-ray looks better on a 4K TV than on an HDTV. 4K content at 15 Mbps HEVC doesn’t look great, but an HD image at 5-10 Mbps will likely look better. How do we make the best use of the pipes to the consumer, not just focusing on the biggest resolution?
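[A rough sketch, not from the panel, of why 4K at 15 Mbps can look worse than HD at 5-10 Mbps: count the bits available per pixel. I'm assuming "4K" means 3840x2160, HD means 1920x1080, and 24 fps for both; none of those were stated. It also ignores codec efficiency, and WA didn't say which codec the HD comparison used.]

```python
def bits_per_pixel(bitrate_mbps: float, width: int, height: int, fps: float) -> float:
    """Average compressed bits spent on each pixel of each frame."""
    return bitrate_mbps * 1e6 / (width * height * fps)


FPS = 24  # assumed frame rate; not given in the session

cases = [
    ("4K UHD @ 15 Mbps", 15, 3840, 2160),
    ("HD @ 10 Mbps", 10, 1920, 1080),
    ("HD @ 5 Mbps", 5, 1920, 1080),
]
for label, mbps, width, height in cases:
    print(f"{label}: {bits_per_pixel(mbps, width, height, FPS):.3f} bits per pixel")
```

[Under these assumptions the 4K stream gets about 0.075 bits per pixel, against roughly 0.10 to 0.20 for the HD stream, so each 4K pixel is being described with fewer bits even before you account for the display.]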

AK: I’m a film archivist. A colleague says we shouldn’t call anything captured digitally “film”. But “film” has cultural weight. We’ve spent the last 2 years figuring out how to save digital files as effectively as putting film on a shelf. Building internal infrastructure for preserving assets. “The Pillars of Digital Preservation.” The big secret? It’s really boring! Tons of detail work: verification, replication, regular health checks, geographic separation. One system for preservation and distribution; that way we can handle release formats of the future.

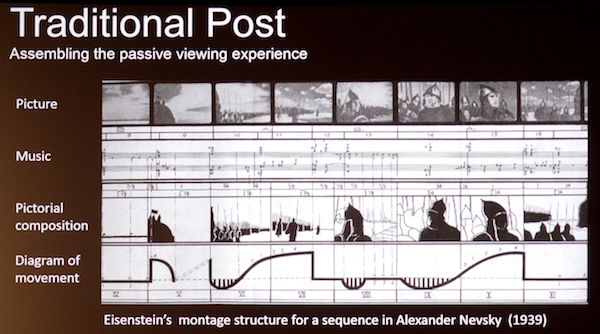

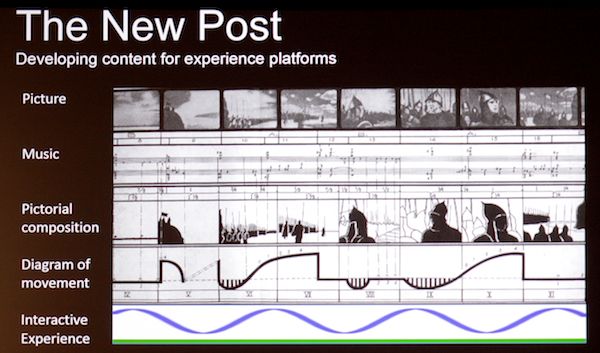
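[The "boring" verification and health-check work usually comes down to checksums. Here is a minimal sketch, assuming SHA-256 and a simple path-to-hash manifest; the function names are hypothetical and this is not a description of Paramount's actual system.]

```python
import hashlib
from pathlib import Path


def sha256_of(path: Path, chunk_size: int = 1 << 20) -> str:
    """Hash a file in chunks so large media files never sit fully in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def build_manifest(root: Path) -> dict:
    """Record a checksum for every file under root at ingest time."""
    return {
        p.relative_to(root).as_posix(): sha256_of(p)
        for p in sorted(root.rglob("*"))
        if p.is_file()
    }


def health_check(root: Path, manifest: dict) -> dict:
    """Re-hash the archive and sort each file into ok, missing, or corrupt."""
    report = {"ok": [], "missing": [], "corrupt": []}
    for rel, expected in manifest.items():
        path = root / rel
        if not path.is_file():
            report["missing"].append(rel)
        elif sha256_of(path) != expected:
            report["corrupt"].append(rel)
        else:
            report["ok"].append(rel)
    return report
```

[Run `build_manifest` once at ingest, then run `health_check` on a schedule against each replica; anything missing or corrupt gets re-copied from a good copy in another location.]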

KW: Developing content for experience platforms. I cut my teeth on CMX, editing quad.

Three types of Xbox content: third-party broadcast TV, 2nd-party (NFL feed, etc.), and 1st-party Microsoft content. 3rd party original form of consumption. Xbox Snap, a sort of onscreen 2nd screen. 1st party content (games), designing entertainment content which maximizes tech capabilities (force feedback, user-generated gameplay). 2012 Presidential Debates with interactive Xbox voting.

Toolkits for developers: SmartGlass (synchronized 2nd screen on phone/tablet). Xbox hardware 1080p30 HD camera, enhanced IR, Kinect. IllumiRoom.

[no discussion of this; out of time]

360-degree 13k Using Only Archival Footage – Frédéric Lumiere, Lumiere Media

I was the CIO of Hooked on Phonics and then I moved to Hollywood and became an extra; that’s how I learned how to do filmmaking. Someone came to me: can we do a 360º film? Sure! “Normandy’s 100 Days” is the film. The theater in France, on D-Day’s Gold Beach, has 9 screens surrounding the audience. The current 360º film has been running for 22 years; it was a full-time job… until they went digital: now the entire job is pushing a button on an iPad. The specs for the new film: ARRO 360, 13K wide, 9 HD screens side by side:

Workflow: selects in FCP; average of 5 unique clips playing at a time (some get replicated or split across multiple screens), average 7 seconds x 20 minutes. Edit in After Effects, output as DPX, 9x Animation clips encoded to MPEG-2. Sound mix is 9.2.1. We pushed AE so hard: there’s a limit of 1000 layers if you want it to display! Challenges: action has to move synchronously across screens. 9 months of research across 6 countries. 10 Robert Capa photos taken on the beach; incredibly hard to license. I convinced them that these 10 photos are the perfect 360º composition, and they let me do it. [Trailer available on YouTube]

Too Much Geek Speak – Randall Dark, Randall Dark Productions

For the last 25 years I’ve been addicted to HD resolution. Now you’ve given me 4K crack!

I started in theater in Canada. I learned if you don’t market, no one shows, you’re dead. If you don’t market accurately, they won’t come back.

In 1986 I made the move into HD. In the early years of HD, confusion about standards. I worked at ATSC test lab. Fast-forward to late 1990s, I get frustrated with geek speak: the objective defining of technology. Shot “Jaguar”, combining interlaced HD with 35mm.

At times we’re frustrated by being told “you need more res”, “if you don’t shoot with 4K you won’t have clients”, etc. For a lot of things you don’t need 4K; for docco you worry about storage. I decided to shoot a doc with 20 different camera types, including a 4K wide shot [that little JVC 4K camera? He didn’t say] allowing push-in; some little POV cams, DSLRs, F3, even 1080p video-recording sunglasses. Not one person was bothered by the different looks: the story worked.

Use the tools, don’t get pressured by the numbers. Hollywood is a separate world, a separate planet; the rest of America isn’t worried about 4K. When is it “good enough”? At what point do we have enough; when is the tech too much? What is the business model? What’s the future for the independent filmmaker? 95% of The Film Bank’s business is 1080p.

When we push a tech, it has a ripple effect. We’re pushing 4K, but most installed digital projectors are 2K. For the last 20 years, I’ve been going into CE stores, looking at the content I had created for the TV vendors. I’d talk to the sales people, lots of confusion. I went into a CE store just now, saw signs everywhere: “4K Ultra HD TV – Four times clearer than HD”. OK, what does that mean to the consumer? Had a 1080p TV and a 4K TV side-by-side, fed OTA TV to both, looked at it, but we didn’t see 4x clearer images! My concern is we’ve alienated our consumer base. We told ‘em to buy 3D, now it’s 4K. How are we marketing this? If we say it’s 4x better and the demos show it isn’t, that’s a disservice, we disenfranchise the people we’re selling to. We aren’t giving accurate info to the consumer, and it’s the consumer that’s keeping us in business! In games, what counts: 99% say frame rate, not res, not color. “There’s no way I’m taking a 4K TV unless it refreshes fast enough.” Gamers don’t see any advantage to current 4K TVs with 30fps maximum.

Q: For the multicam docco, how did you cut it?

A: Transcoded everything to ProRes and cut it that way.

Q: “4x clearer than HD” is the wrong message. What should they say?

A: To have bold statements that are hard to substantiate is the wrong way. I don’t come up with the catchphrases; just don’t over-promise. Don’t use geek speak to talk to the consumer.

Accessing Encrypted Assets Directly in MacOS – Mathew Gilliat-Smith, Fortium Technologies

Always security concerns. MPAA doesn’t like to publicize breaches, but they happen. Concerns with computers connected to the ‘net, cloud workflows, proxy files. NBC Universal identified a specific risk in pro editing systems and designed MediaSeal encrypted system.

MacOS doesn’t support encrypted files, and the solution had to be cross-platform. How to create reliable end-to-end encryption? Must be centrally managed, file agnostic, no altering of file, useable on closed networks and in the cloud, complementary to existing systems. Created a file system filter driver (HSMs and virus detectors run this way) at the kernel level. Facilitated on Windows, not on Mac, but we did it anyway. Sits between the storage and the user level, lets unencrypted files pass unimpeded, handles and controls access to encrypted files: requires authentication (by dongle, password, and key database), only decrypts on-the-fly into memory, never puts unencrypted data on disk.

Core element: Database key server, encryption server, decryptor license + iLok key. Key server allows encoding, forces strong passwords, allows setting access times/dates, add/remove approved users. Encrypts in seconds, roughly file-copy time. Uses AES encryption. User has to enter password to decrypt and use, but after that access is seamless. If you yank the iLok dongle, you get a full-coverage screen lock if files are being used, until you re-insert the iLok. Full reporting audit trail.
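[A sketch, not from the talk, of the three-prong check described above: dongle present, user approved in the key database within the allowed access window, and correct password. Every name here is hypothetical, the password hashing scheme is my assumption, and no actual decryption is shown; this is not MediaSeal's implementation.]

```python
import hashlib
import hmac
from dataclasses import dataclass
from datetime import datetime


@dataclass
class KeyRecord:
    """Hypothetical key-database entry for one encrypted file."""
    salt: bytes
    password_hash: bytes
    approved_users: set
    not_before: datetime
    not_after: datetime


def hash_password(password: str, salt: bytes) -> bytes:
    # PBKDF2 is an assumed choice for illustration only.
    return hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, 100_000)


def may_decrypt(record: KeyRecord, user: str, password: str,
                dongle_present: bool, now: datetime) -> bool:
    """Allow access only if all three prongs pass."""
    if not dongle_present:                                   # prong 1: hardware key
        return False
    if user not in record.approved_users:                    # prong 2: key database
        return False
    if not (record.not_before <= now <= record.not_after):   # access window
        return False
    # prong 3: password, compared in constant time
    return hmac.compare_digest(hash_password(password, record.salt),
                               record.password_hash)
```

[In the product as described, a check like this would sit in front of every read of an encrypted file, with unencrypted files bypassing it entirely.]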

Cloud workflow: fully integrable with asset control. MediaSeal FSFD can be used locally or in the cloud. API for 3rd parties: encryption, FTP delivery, editing / authoring systems, scriptable through command line. www.mediaseal.com

Q: Thought of using biometrics? iLoks and passwords can be stolen.

A: We use a three-prong method and think that’s robust.

Q: What happens if you have software that can export files, like QuickTime Pro?

A: There’s an auto-encrypt process; if a user tries to save a copy, or render a timeline, it gets automatically encrypted.

Q: List of allowable / disallowed software?

A: Yes, there’s a blacklist.

Q: How do you deliver an unencrypted version?

A: It’s up to you, you can keep it all encrypted or allow copies in the clear.

Q: Any issues with export control given your encryption tech? What happens when Apple changes the OS?

A: We went from QT7 to QT X; we think the architecture will survive OS upgrades. No quick answer on export control.

The Evolving Threat Landscape: Current Security Trends in the Entertainment Industry –

Steve Bono, Independent Security Evaluators

New trends for security practices:

Security separate from functionality. The two often live together in the IT dept. There’s pressure for functionality, rarely for security, and the people making the decisions may not be the right ones. Solution: security should be split off from IT. That makes the conflicts clear, and conflict is good: it gets solved, not ignored.

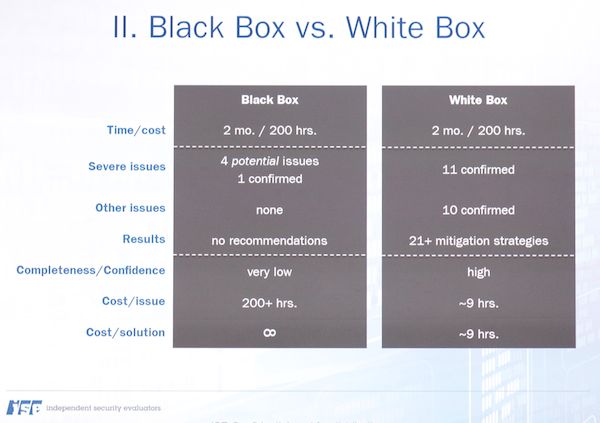

Black box penetration testing is widely considered good, but it’s not the sort of thing you should be asking for; white box vulnerability assessments are better. (Black box is blind testing, white box lets you see what’s going on, and going wrong.) A penetration test is a pass/fail test. Vulnerability assessments are holistic views of all the problems you find, giving a more accurate risk assessment. Case study:

Secure assets, not just perimeters: modern attacks require defense in depth. Otherwise you have a hard candy shell with a soft, gooey center. Flash drives, other malicious peripherals, BYOD, email, malware: all circumvent perimeter defenses. Use internal data silos, short-expiration cookies, etc.

Build it in, don’t bolt it on: security is best when built in from the ground up. A bug found in requirements is 10x+ easier to fix than if it’s found later. The security itself is only marginally cheaper to build in than to bolt on, but issues take 25x longer to repair in apps if security is bolted on, 300x in infrastructure (time to fix in bolt-on vs. time to assess in the built-in case).

Security as an ongoing process: do it early and often. New attack types every day, new threats. The more frequent your security assessment, the lower the vulnerability, and it costs less, too: the lower the amount of change between assessments, the lower the learning-curve costs. Quarterly testing winds up costing less than yearly testing. (Bi-yearly is slightly cheaper, too, but with longer windows of vulnerability.)

Q: Is there a provision against theft from trusted employee?

A: Policies and procedures work hand in hand with technical methods.

Q: Recommendations for home machines? Anti-virus software?

A: Practice same diligence as you would at the office. Individuals get targeted, too. Do use an anti-virus program.

Q: Any way to mitigate DDoS attacks?

A: No real way. There are techniques that make these attacks harder, but they have a problem telling a DDoS attack apart from a “flash crowd” traffic load.

What Just Happened? – A Review of the Day by Jerry Pierce & Leon Silverman

“Ultra Tech Retreat: 4x better than NAB”! We have more women here this time, even that last panel with no men on it. “We’re forming a new post house in our bedroom, it’s called Bed Post.” A new word for post? It’s “production”. The word “movie” is juvenile, “motion picture” is so old fashioned. What should we call it? Theatrical features? Cinema? Film? Movies? [no consensus]

HPA Tech retreat 2014 full coverage: Day 1, Day 2, Day 3, Day 4, Day 5, wrap-up

Disclosure: HPA is letting me attend the conference on a press pass, but I’m paying for my travel, hotel, and meals out of my own pocket. No company or individual mentioned in my coverage has offered any compensation or other material consideration for a write-up.