The annual HPA Tech Retreat is over; here’s my quick summary of trends that stood out, as well as a few interesting images.

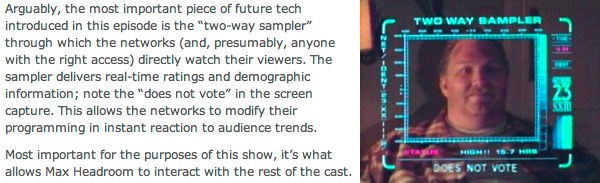

Chasing viewer engagement / sampling / metrics: Real-time social-media monitoring. Set-top boxes delivering customized ads. Live user-engagement feedback into production practices. There has always been a desire for detailed audience measurement; now it seems the technology is able to deliver.

20 Minutes into the Future? More like 1985, at least for the concept:

Don’t worry about the NSA, the NSA doesn’t really care about you. The entertainment industry and the ad industry care very very much about you: what you’re watching, what you’re thinking, who you’re talking to, what you’re buying.

Beyond that, if programming decisions get influenced in real-time by audience metrics, what would (will?) we wind up with? “Wall-to-wall Honey Boo Boo reality shows”, said my friend Paul Turner. He’s not very cheerful about the aesthetic and dramatic implications of crowdsourcing creative choices on a cut-by-cut basis.

But that may simply be beside the point: remember that there’s a reason it’s called “show business”, not “show art”. “House of Cards” may win Emmys, but over at UFC, it’s mixed martial arts that pays the bills: second only to soccer in worldwide popularity if UFC is to be believed. The mind boggles.

Moving beyond 4K: Look, we’re going to get 4K, whether we need it or not; 4K is an easy expansion of existing technology that doesn’t push any of our production and distribution ecosystem beyond its current core concepts. At its simplest level, as Poynton and Watkinson explained, 4K capture and 4K display with an HD pipeline between the two yields visible benefits at minimal cost (just as Microsoft and others argued during the HD / DTV transition in the Grand Alliance days that many of the benefits of HD can be realized using SD transmission, as anyone who watches DVDs of Hollywood films via a good upscaling player on an HDTV can attest).

But the exciting stuff is better pixels, not just more of ‘em. We’re finally talking about moving beyond a “display referenced” world where our image capture, image coding, and processing chains are optimized for the limits of film projection and CRT displays, to a “scene referenced” world where we aim to take in, encode, and process as much of the original scene content as possible (think high frame rates, high dynamic range, wide color gamut). Only when necessary will we then mash things down to accommodate the limits of a display technology, but those limits are rapidly being pushed outwards.

High dynamic range displays, like the Dolby Pulsar in the demo room, allow for far more realistic tonal-scale portrayal than existing practice allows. The Pulsar display is 40 times brighter than a standard reference monitor (4,000 nits vs 100 nits), and the talk at the Tech Retreat was of extended dynamic range EOTFs that encompass 10,000 nits. In the clip shown, the polished aluminum skin of that aircraft sparkled like the real thing, with intense (pun intended) specular reflections.

There were always crowds ‘round this demo. People carp about whether 4K is any advancement over HD, or whether the punters can even tell HD apart from SD, but there was no such argument here: as with the high-dynamic-range projection demo last year, this display was clearly and obviously a step beyond what we’re all used to watching.

Using something like Dolby / MovieLabs Perceptual Quantization coding lets us carry that extended dynamic range all the way through delivery; a tone-mapping function is used to allow for lesser displays to optimally render an EDR image, probably by maintaining contrast in the midtones and aggressively S-curving the highlights, just as we currently do in post when we grade 14-stop images to fit the roughly 6-stop extent of our standardized, 100 nit reference displays.
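To make the idea of a perceptual quantizer a little more concrete, here is a minimal Python sketch of a PQ-style curve. It assumes the constants and 10,000 nit peak that were later published in SMPTE ST 2084, which may differ in detail from what was shown at the Retreat; the function names `pq_encode` and `pq_decode` are illustrative, not anyone's actual API.

```python
# PQ-style transfer curve, assuming the SMPTE ST 2084 constants.
M1 = 2610 / 16384          # ~0.1593
M2 = 2523 / 4096 * 128     # ~78.84
C1 = 3424 / 4096           # ~0.8359
C2 = 2413 / 4096 * 32      # ~18.85
C3 = 2392 / 4096 * 32      # ~18.69
PEAK_NITS = 10000.0        # top of the coded luminance range


def pq_encode(nits):
    """Map absolute luminance (cd/m^2) to a normalized 0..1 signal value."""
    y = min(max(nits, 0.0), PEAK_NITS) / PEAK_NITS
    yp = y ** M1
    return ((C1 + C2 * yp) / (1.0 + C3 * yp)) ** M2


def pq_decode(signal):
    """Map a normalized 0..1 signal value back to luminance in cd/m^2."""
    sp = min(max(signal, 0.0), 1.0) ** (1.0 / M2)
    return PEAK_NITS * (max(sp - C1, 0.0) / (C2 - C3 * sp)) ** (1.0 / M1)


if __name__ == "__main__":
    for nits in (0.1, 100.0, 4000.0, 10000.0):
        print(f"{nits:>8} nits -> signal {pq_encode(nits):.4f}")
```

Under these assumptions a 100 nit reference white lands at roughly the middle of the signal range, which leaves the upper half of the code values for highlights that today's displays simply clip or roll off.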

Wider color gamuts (Rec.2020) help too, and Gary Demos goes a step further (as he often does; he’s usually a step or five ahead of industry practice) by suggesting we capture spectral information about the capture device and conform it to the spectral distribution of the display device, taking into account the characteristics of the observer, the monitor, and the ambience in the grading suite, so that the end viewer sees something as close as possible to what the director intended.

Suggestions made during the Tech Retreat that we move to higher frame rates, especially for image capture, met with widespread approbation and applause, with only a couple of dissenters defending 24fps as The One True Frame Rate (after one such debate in Monday’s session, I went up to John Watkinson and quietly said, “the problem is, you’re trying to argue against religion by using science.” He just smiled, and shook my hand).

All of these — HDR, WCG, and HFR — require fundamental changes in our entire production infrastructure. That these things are being discussed with calmness and equanimity at the Tech Retreat tells me that we may well see such changes diffuse into the wider world, probably some time in the next five to ten years.

Ethernet replacing SDI: 4K connectivity is a mess: do we use four SDI connections, or two 3G-SDI connections, or one 6G-SDI connection? Depending on what gear you’re using, the answer is “yes”. But even there, that only gets you to 30p; for 60p, you need twice that bandwidth. Belden may be able to make us 12G-SDI cables, but the cost advantages and routability of Ethernet will likely win, and sooner than you might think. Presentations and panels on software-defined video networking, networked facilities, and even IP-based live production were backed up with practical demos in the demo room, with SMPTE standards underlying the technology, and interoperability increasing rapidly.
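The link-count arithmetic can be sketched in a few lines of Python. This is a back-of-the-envelope model, not a statement of any SMPTE mapping: it assumes a 1080p30 signal fills one nominal 1.485 Gbit/s HD-SDI link, that a UHD frame is simply four of those, and that "four SDI connections" means four HD-SDI links. The names `uhd_rate_mbps` and `links_needed` are made up for the example.

```python
# Nominal SDI link rates in Mbit/s (integers, to keep the arithmetic exact).
LINK_RATES_MBPS = {
    "HD-SDI": 1485,
    "3G-SDI": 2970,
    "6G-SDI": 5940,
    "12G-SDI": 11880,
}


def uhd_rate_mbps(fps):
    """Approximate rate for a UHD signal, assuming it is four 1080p
    quadrants and that 1080p30 fills exactly one HD-SDI link."""
    return 4 * LINK_RATES_MBPS["HD-SDI"] * fps // 30


def links_needed(fps):
    """Number of links of each type needed to carry UHD at the given rate."""
    rate = uhd_rate_mbps(fps)
    # -(-a // b) is integer ceiling division.
    return {name: -(-rate // mbps) for name, mbps in LINK_RATES_MBPS.items()}


if __name__ == "__main__":
    print(links_needed(30))  # {'HD-SDI': 4, '3G-SDI': 2, '6G-SDI': 1, '12G-SDI': 1}
    print(links_needed(60))  # {'HD-SDI': 8, '3G-SDI': 4, '6G-SDI': 2, '12G-SDI': 1}
```

Seen this way, the appeal of a single routable 10GigE (or faster) connection over bundles of coax is fairly obvious.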

Three years ago I wired a production facility for 10GigE and 3G-SDI: data feeds and video feeds to both stages and every important room. If I were to do that three years hence, I’d almost certainly leave out the SDI and just have converter boxes ready to deploy, to flip between baseband SDI and packet-based transport where necessary. (Today we’re right at the tipping point; I’d probably pull SDI alongside IP, though I’m not sure which way I’d go… but my bets are on SMPTE 2022 being the way of the future.)

The cloud wins: Only three years ago, Tech Retreat panelists were saying it was preferable to send a Digibeta tape across town by messenger than deal with file-based workflows, once you included the time spent in backup, duplication, and verification passes. A year or two before that, they were saying it was quicker to send a FireWire drive across town (in L.A. rush-hour traffic, no less) than to try to transfer the files it contained over high-speed Internet connections.

This year? Internet transfers and cloud-based collaboration are the norm; while sending physical media from location to post in a messenger pouch is still done, it’s in the process of being moved into an IP-based world as soon as the card comes out of the camera… or even before, with talk of proxies (at least) being uploaded wirelessly as they’re being shot.

Images

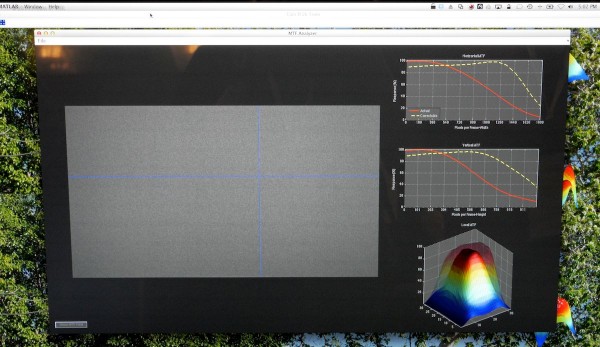

Tessive’s MTF correction demo. Blue crosshairs show the point being measured.

Tessive founder Tony Davis, having sold off his Time Filter to “an unnamed camera company”, showed an automatic MTF measurement and correction demo: your camera shoots a carefully-calibrated “noise” chart, and his software will measure the H and V modulation transfer functions (spatial frequency responses) of the camera/lens combo at every point across the sensor. He can then generate an appropriate correction — basically, a very smart sharpen filter — to enhance the image without adding lots of nasty artifacts. Very clever! It’s not a real product yet (and may or may not become one), just a demo running in MATLAB.

Canon was showing a very nice 32″ 4K display (it had better be very nice, it’ll be $33K when it ships). They were using several to demonstrate a full ACES workflow with the C500.

One thing that impressed me was the modal control panel, switchable from standard “video” controls (sharpness, chroma, brightness, contrast) to CDL grading controls (power, saturation, offset, and slope; essentially lift/gamma/gain and saturation). Makes quick one-light grading (and CDL generation) very fast indeed.
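For anyone who hasn’t looked under the hood of a CDL, the four controls map onto a very small amount of math. Here is a minimal Python sketch of the standard ASC CDL operations (slope, offset, power per channel, then saturation using Rec.709 luma weights). It is not Canon’s implementation; the function name `apply_cdl` and the clamping to the 0..1 range are assumptions made for the example.

```python
# Rec.709 luma weights, as used by the ASC CDL saturation step.
LUMA_WEIGHTS = (0.2126, 0.7152, 0.0722)


def _clamp01(x):
    return min(max(x, 0.0), 1.0)


def apply_cdl(rgb, slope, offset, power, saturation):
    """Apply slope/offset/power per channel, then saturation.

    rgb, slope, offset, and power are 3-tuples; saturation is a scalar.
    Values are assumed to be normalized to 0..1 and are clamped there.
    """
    # Slope, offset, power: out = clamp(in * slope + offset) ** power
    sop = [
        _clamp01(v * s + o) ** p
        for v, s, o, p in zip(rgb, slope, offset, power)
    ]
    # Saturation: scale each channel's distance from luma.
    luma = sum(w * c for w, c in zip(LUMA_WEIGHTS, sop))
    return tuple(_clamp01(luma + saturation * (c - luma)) for c in sop)


if __name__ == "__main__":
    pixel = (0.20, 0.50, 0.80)
    # A slightly warmer, punchier one-light: more red gain, a touch of gamma.
    graded = apply_cdl(
        pixel,
        slope=(1.10, 1.00, 0.95),
        offset=(0.00, 0.00, 0.00),
        power=(1.05, 1.05, 1.05),
        saturation=1.10,
    )
    print(graded)
```

Ten numbers per shot is all a CDL carries, which is why a monitor that can dial them in directly makes one-light grading so quick.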

HPA’s Executive Director Eileen Kramer is retiring from full-time HPA work, though she will remain in charge of the Tech Retreat. The Wednesday dinner was pressed into service as the Eileen Kramer Appreciation Dinner, and many HPA folks spoke eloquently about how Ms. Kramer has been Den Mother / the only adult in the room / the person holding the HPA together / etc.

Not being an HPA member, I can’t personally attest to her tenure there, but I can say that the Tech Retreat is probably the best run and most enjoyable conference I’ve ever attended. Huzzah, then, for Ms. Kramer!

HPA Tech Retreat 2014 full coverage: Day 1, Day 2, Day 3, Day 4, Day 5, wrap-up

Disclosure: HPA let me attend the conference on a press pass, but I paid for my travel, hotel, and meals out of my own pocket. No company or individual mentioned in my coverage has offered any compensation or other material consideration for a write-up.